Crawlability Issues on Your Website and Their Solutions

Crawlability issues refer to obstacles that hinder search engine bots from effectively navigating and indexing a website.

Common crawlability issues include broken links, server errors, poor URL structures, and duplicate content. Solutions include fixing broken links, resolving server errors, simplifying URL structures, unblocking essential resources in robots.txt, addressing duplicate content, optimizing page speed, and ensuring mobile compatibility.

By addressing these issues, websites can improve SEO performance and visibility in search results.

Ensuring your website is crawlable is a fundamental aspect of SEO. Crawlability refers to the ability of search engine bots to access and navigate your website’s content.

If search engines cannot crawl your site effectively, your pages may not be indexed, leading to poor visibility in search results. Here, we explore 17 common crawlability issues and their solutions to help you optimize your website.

Common Crawlability Issues on Websites and Their Solutions

Crawlability issues can stem from various factors, including technical errors, poor site architecture, and incorrect configurations. Addressing these issues is crucial for improving your site’s SEO performance and ensuring that search engines can access and index your content efficiently.

Broken Links

Broken links, or dead links, lead to pages that no longer exist. These can disrupt the crawling process, causing search engines to encounter errors and potentially miss indexing valuable content.

Solution

Regularly audit your website using tools like Screaming Frog or Ahrefs to identify and fix broken links. Replace or remove any dead links, and ensure all links on your site point to live, relevant pages.
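Between full audits, a small script can pull the links out of a page and request each one. The sketch below uses only the Python standard library. The function names are hypothetical. It sends HEAD requests, and some servers answer HEAD with 405, so a GET fallback may be needed. It treats any 4xx/5xx status or network failure as broken.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip missing, fragment-only, mailto: and javascript: links.
        if href and not href.startswith(("#", "mailto:", "javascript:")):
            self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    """Return every followable link on the page as an absolute URL."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


def status_of(url, timeout=10):
    """Return the final HTTP status after redirects, or None if the request fails."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as err:
        return err.code  # 4xx and 5xx responses are raised as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, timeout, etc.


def is_broken(status):
    """Treat unreachable URLs and any 4xx/5xx status as broken."""
    return status is None or status >= 400
```

Calling `is_broken(status_of(url))` for each URL returned by `extract_links` gives you the list of links to replace or remove.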

Server Errors

Server errors, such as 5xx errors, indicate issues with your website’s server, preventing crawlers from accessing your site. These errors can severely impact crawlability and indexing.

Solution

Monitor your site for server errors using the Page indexing and Crawl Stats reports in Google Search Console. Work with your hosting provider to resolve server issues and ensure your server is reliable and responsive.
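Your server logs show the errors crawlers actually hit, including brief outages that may not stand out in reports. The following is a minimal sketch. It assumes your server writes the common Apache/Nginx "combined" log format, and the function name is hypothetical. User-agent strings can be spoofed, so confirm real Googlebot traffic (for example with a reverse DNS lookup) before acting on the counts.

```python
import re
from collections import Counter

# Combined Log Format: host ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def server_errors_for_bot(lines, bot="Googlebot"):
    """Count 5xx responses per path for requests whose user agent mentions bot."""
    errors = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        # Skip lines in another format and requests from other user agents.
        if not match or bot not in match["agent"]:
            continue
        if match["status"].startswith("5"):
            errors[match["path"]] += 1
    return errors
```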

Redirect Chains and Loops

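Redirect chains occur when a URL redirects to another URL, which then redirects to another URL, and so on. Redirect loops happen when a URL redirects, directly or through a chain, back to itself, so the request never reaches a final page. Both issues can confuse crawlers and waste crawl budget, and search engines may stop following a long chain before reaching the destination.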

Solution

Audit your site for redirect chains and loops using tools like Screaming Frog. Simplify your redirects to ensure they point directly to the final destination, and avoid unnecessary redirections.
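Once you have your redirect rules as source-to-target pairs, flattening them is a small graph walk. This sketch assumes the rules fit in a dictionary of one-hop redirects, which is a hypothetical input format. It maps each source straight to its final destination and reports sources whose chain runs into a loop.

```python
def resolve_redirects(redirects):
    """Flatten one-hop redirect rules and detect loops.

    redirects: dict of source URL -> target URL.
    Returns (flattened, loops): flattened maps each source directly to its
    final URL; loops lists sources whose chain revisits a URL.
    """
    flattened, loops = {}, []
    for source in redirects:
        seen = [source]
        current = redirects[source]
        # Keep following while the current URL is itself redirected.
        while current in redirects:
            if current in seen:
                loops.append(source)
                break
            seen.append(current)
            current = redirects[current]
        else:
            # The loop ended without a break: current is the final destination.
            flattened[source] = current
    return flattened, loops
```

Each entry in `flattened` is a rule you can rewrite as a single 301 hop, and each URL in `loops` needs a new destination.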

Poor URL Structure

Complicated or non-descriptive URLs can hinder crawlability. URLs with excessive parameters, long strings, or unclear hierarchies can be difficult for crawlers to navigate.

Solution

Simplify your URL structure by using clear, concise, and descriptive URLs. Avoid using unnecessary parameters and ensure your URLs reflect the content and hierarchy of your site.
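Descriptive URLs are usually generated from page titles. The following is a minimal sketch of a slug helper, and it assumes an English-language site. It strips accents and drops other non-ASCII characters; sites in non-Latin scripts may prefer to keep UTF-8 slugs. It then joins section names into a hierarchical path. The function names are hypothetical, and the trailing slash is a convention choice. What matters is using one form consistently.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Decompose accented characters, then drop anything that is not ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    text = re.sub(r"[^a-zA-Z0-9]+", "-", text).strip("-")
    return text.lower()


def build_path(*segments):
    """Join slugified segments into a hierarchical path such as /blog/seo/page/."""
    return "/" + "/".join(slugify(s) for s in segments) + "/"
```

Instead of a URL like `/index.php?id=4821&cat=7`, `build_path("Blog", "Technical SEO", "Crawlability Issues & Solutions")` returns `/blog/technical-seo/crawlability-issues-solutions/`.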

Blocked Resources

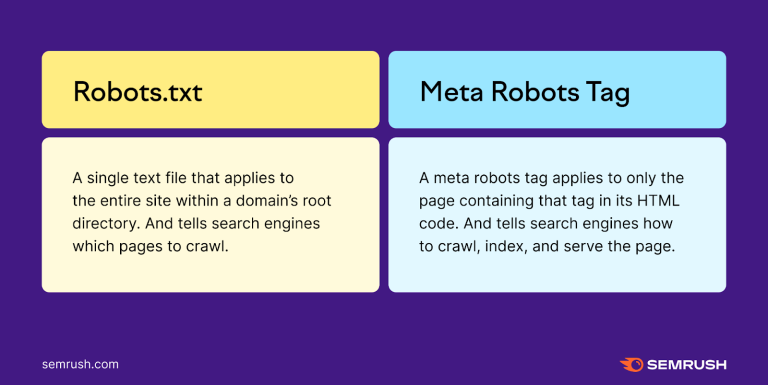

Blocking essential resources, such as CSS, JavaScript, or images, in your robots.txt file can prevent crawlers from accessing and rendering your pages correctly, affecting crawlability and indexing.

Solution

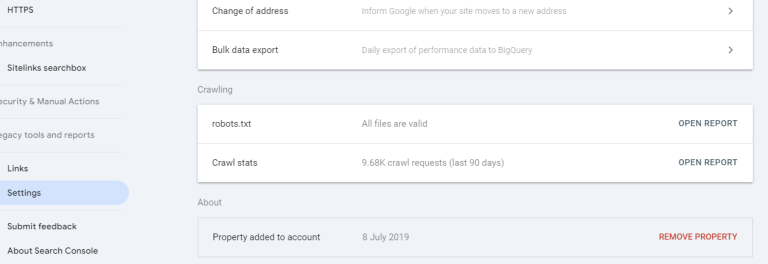

Review your robots.txt file to ensure you are not blocking essential resources. In Google Search Console, use the robots.txt report to confirm which robots.txt file Google fetched and whether it was parsed without errors, and use the URL Inspection tool to see how Googlebot renders your pages. Allow access to the resources needed for proper rendering and indexing.
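To spot blocked resources in bulk, you can collect the stylesheets, scripts and images a page references and check each one against your robots.txt rules. The following is a sketch using Python's `urllib.robotparser`, and the function names are hypothetical. It only checks resources on the page's own host, because assets on a CDN are governed by that host's robots.txt. Python's parser applies the first matching rule and ignores `*` and `$` wildcards, while Google uses the most specific match. When testing this way, list `Allow` lines before broader `Disallow` lines so both interpretations agree.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit
from urllib.robotparser import RobotFileParser


class ResourceCollector(HTMLParser):
    """Collects absolute URLs of stylesheets, scripts and images on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" in rel:
            url = attrs.get("href")
        elif tag in ("script", "img"):
            url = attrs.get("src")
        else:
            return
        if url:
            self.resources.append(urljoin(self.base_url, url))


def blocked_resources(html, page_url, robots_txt, user_agent="Googlebot"):
    """Return same-host resources on the page that robots_txt disallows for user_agent."""
    collector = ResourceCollector(page_url)
    collector.feed(html)
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    host = urlsplit(page_url).netloc
    return [
        url for url in collector.resources
        if urlsplit(url).netloc == host and not robots.can_fetch(user_agent, url)
    ]
```

With `Disallow: /assets/`, the CSS and JavaScript under `/assets/` are reported. Adding `Allow: /assets/css/` and `Allow: /assets/js/` above that line clears them while the rest of `/assets/` stays blocked.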

Duplicate Content

Duplicate content can confuse search engines, leading to inefficient crawling and indexing. It can also dilute the SEO value of your pages, affecting your rankings.

Solution

Identify and resolve duplicate content issues using canonical tags, 301 redirects, or by consolidating duplicate pages. Ensure each page on your site offers unique, valuable content.
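Exact duplicates are easy to find by fingerprinting each page's main text. The following is a minimal sketch. It assumes you have already extracted each page's text into a URL-to-text dictionary, which is a hypothetical input. It catches identical copies only. Near-duplicates need a similarity measure such as shingling instead.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash page text after collapsing whitespace and case, so formatting differences don't matter."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages):
    """pages: dict of URL -> extracted main text. Returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

For each group, choose one URL as the preferred version. Then point the others at it with a canonical tag or a 301 redirect.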

Thin Content

Pages with thin content, or insufficient information, may be crawled and indexed less frequently. Search engines prioritize content-rich pages that provide value to users.

Solution

Enhance thin content by adding more relevant and informative content. Ensure each page addresses the needs and queries of your target audience, providing comprehensive and valuable information.
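A word count will not tell you whether a page is helpful, and a short page can fully answer a query. It is still a cheap way to build a review list. This sketch flags pages below a threshold. The 300-word default is an arbitrary assumption, not a search-engine rule, and the input is a hypothetical URL-to-text dictionary.

```python
import re


def word_count(text):
    """Count runs of word characters as words."""
    return len(re.findall(r"\w+", text))


def thin_page_candidates(pages, min_words=300):
    """Return (url, words) for pages below min_words, shortest first.

    min_words is an arbitrary review threshold, not a search-engine rule.
    """
    counts = [(url, word_count(text)) for url, text in pages.items()]
    return sorted((c for c in counts if c[1] < min_words), key=lambda c: c[1])
```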

Incorrect Use of Robots.txt

Misconfigured robots.txt files can inadvertently block search engine crawlers from accessing important sections of your site, affecting crawlability and indexing.

Solution

Carefully configure your robots.txt file so crawlers can reach all essential areas of your site. Check the robots.txt report in Google Search Console for fetch and parsing errors, and use the URL Inspection tool to confirm that important URLs are not blocked.
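A common misconfiguration is a staging robots.txt containing `Disallow: /` that ships to production. One safeguard is to check, before deploying, that your most important URLs are still crawlable. The following is a sketch using Python's `urllib.robotparser`. The URL list and function name are hypothetical. Python's parser does not implement Google's wildcard and longest-match rules, so treat the result as a smoke test rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical list of pages that must always stay crawlable.
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/products/",
    "https://www.example.com/blog/crawlability/",
]


def blocked_important_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt would stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

In a deployment pipeline, you could read the robots.txt you are about to publish, pass it to `blocked_important_urls` with `IMPORTANT_URLS`, and fail the build if the returned list is not empty.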

Slow Page Load Speed

Slow-loading pages can hinder crawl efficiency. Search engines limit how many requests they make to a site, and when a server responds slowly, crawlers fetch fewer pages in the time they allot. Pages that take too long to load may be skipped during the crawling process.

Solution

Optimize your website’s performance by improving page load speed. Use tools like Google PageSpeed Insights to identify and fix issues affecting your site’s speed, such as optimizing images, leveraging browser caching, and minifying CSS and JavaScript.
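Leveraging browser caching comes down to sending suitable `Cache-Control` headers. The following is a sketch of one common policy. It assumes your build tool adds a content hash to asset file names, for example `app.3f9a2c1b.js`. Hashed assets can be cached for a year, because a new version gets a new name. Un-hashed assets get a short lifetime, and HTML is revalidated on each use. The function name, file extensions and lifetimes are illustrative assumptions.

```python
import re

ASSET_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2")
# Assumed build convention: hashed file names such as app.3f9a2c1b.js.
HASHED_ASSET = re.compile(r"\.[0-9a-f]{8,}\.[a-z0-9]+$")


def cache_control_for(path):
    """Choose a Cache-Control header value for a request path."""
    if path.endswith(ASSET_EXTENSIONS) and HASHED_ASSET.search(path):
        # A new version gets a new file name, so this one can be cached for a year.
        return "public, max-age=31536000, immutable"
    if path.endswith(ASSET_EXTENSIONS):
        # Un-hashed assets: cache for an hour so updates still reach visitors.
        return "public, max-age=3600"
    # HTML and everything else: browsers must revalidate before reusing a copy.
    return "no-cache"
```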

Flash Content

Flash has reached end of life. Adobe ended support at the end of 2020, modern browsers no longer run it, and Google Search no longer indexes Flash content. Pages that rely on Flash therefore cannot be indexed properly, which hurts visibility.

Solution

Replace Flash content with HTML5 or other modern, search-engine-friendly technologies. Ensure your site’s content is accessible and crawlable by using standard web technologies.
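Before migrating, you need an inventory of where Flash is still embedded. The following is a minimal sketch that scans HTML for SWF files referenced through `<object>`, `<embed>` or `<param>` tags. The function name is hypothetical. Each hit is a candidate for replacement with HTML5, such as a `<video>` element or standard HTML, CSS and JavaScript.

```python
from html.parser import HTMLParser


class FlashFinder(HTMLParser):
    """Records SWF URLs referenced by <object>, <embed> or <param> tags."""

    def __init__(self):
        super().__init__()
        self.swf_urls = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "embed":
            candidate = attrs.get("src")
        elif tag == "object":
            candidate = attrs.get("data")
        elif tag == "param" and (attrs.get("name") or "").lower() in ("movie", "src"):
            candidate = attrs.get("value")
        else:
            return
        # Ignore any query string when checking the file extension.
        if candidate and candidate.lower().split("?")[0].endswith(".swf"):
            self.swf_urls.append(candidate)


def find_flash(html):
    finder = FlashFinder()
    finder.feed(html)
    return finder.swf_urls
```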

Orphan Pages

Orphan pages are pages on your site that are not linked to from any other page. These pages can be difficult for crawlers to find, leading to poor indexing.

Solution

Ensure all important pages on your site are linked from other pages. Use internal linking strategies to connect orphan pages to the rest of your site, improving crawlability and navigation.
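Comparing the pages you know exist (from your CMS or sitemap) with the pages reachable through internal links shows what link-following crawlers would miss. This sketch does a breadth-first walk from the homepage. Its input is a hypothetical page-to-links dictionary, such as one exported from a site crawler. Besides true orphans, it also reports pages linked only from other unreachable pages, because crawlers following links would miss those as well.

```python
from collections import deque


def find_orphans(known_urls, links, start="/"):
    """Return known URLs that cannot be reached by following internal links from start.

    known_urls: pages you expect to exist (e.g. from the sitemap or CMS).
    links: dict mapping each page to the internal URLs it links to.
    """
    reachable = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(known_urls) - reachable)
```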

Sitemap Issues

Incorrect or outdated sitemaps can misguide search engines, leading to inefficient crawling and indexing. Missing or incomplete sitemaps can also prevent crawlers from discovering all your pages.

Solution

Regularly update and submit your XML sitemap to search engines. Ensure your sitemap includes all important pages and accurately reflects your site’s structure. Use tools like Google Search Console to monitor and fix sitemap issues.
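If your CMS does not generate a sitemap, the format is simple enough to build yourself. The following is a sketch with Python's `xml.etree.ElementTree` that writes `<loc>` and `<lastmod>` entries in the sitemaps.org namespace. The function name and input format are assumptions. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites need several files listed in a sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: iterable of (absolute URL, last-modified date as YYYY-MM-DD).

    Returns the sitemap as UTF-8 encoded bytes, ready to write to sitemap.xml.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in the text for us.
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```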

Dynamic URLs

Dynamic URLs with complex parameters can confuse search engines and lead to inefficient crawling. These URLs can create duplicate content issues and waste crawl budget.

Solution

Use URL parameters sparingly and consider using static, descriptive URLs instead. Implement canonical tags to consolidate dynamic URLs and prevent duplicate content issues.
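Consolidating dynamic URLs starts with deciding which parameters actually change the content. The following sketch normalizes URLs in three steps. It drops parameters assumed not to affect content; the `IGNORED_PARAMS` set is a hypothetical example for a store. It then sorts the remaining parameters and lowercases the host. Equivalent URLs collapse to one form that you can use as the canonical target.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change page content on this example site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "ref"}


def normalize_url(url):
    """Drop ignored parameters, sort the rest and lowercase the host."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    # The fragment is dropped because it is never sent to the server.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(query), ""))
```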

Missing or Incorrect Canonical Tags

Missing or incorrect canonical tags can cause duplicate content issues, leading to inefficient crawling and indexing. This can dilute the SEO value of your pages.

Solution

Implement canonical tags correctly to indicate the preferred version of a page. Ensure all duplicate or similar pages point to the canonical version, helping search engines index the right content.
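A canonical check can run as part of a site audit. This sketch uses hypothetical function names. It reads the `<link rel="canonical">` tags from a page's HTML and resolves relative values against the page URL. It then reports a missing tag, more than one tag, or a tag that points somewhere other than the preferred URL you supply.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_problems(html, page_url, expected):
    """Return a list of human-readable problems with the page's canonical tag."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return ["missing canonical tag"]
    problems = []
    if len(finder.canonicals) > 1:
        problems.append("multiple canonical tags")
    target = urljoin(page_url, finder.canonicals[0])
    if target != expected:
        problems.append(f"canonical points to {target}, expected {expected}")
    return problems
```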

Unoptimized Mobile Experience

With mobile-first indexing, Google primarily crawls and indexes the mobile version of your pages. Content or links that are missing or broken on the mobile version may therefore not be crawled or indexed at all.

Solution

Optimize your website for mobile devices by using responsive design, improving load speed, and ensuring all content is accessible on mobile. Use Lighthouse, built into Chrome DevTools, to identify and fix mobile usability issues.
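One quick signal of a responsive page is a viewport meta tag with `width=device-width`. Without it, mobile browsers lay the page out at a desktop-like width and scale it down. The following sketch, with a hypothetical function name, checks for that tag only and is no substitute for a full Lighthouse audit.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Stores the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        is_viewport = tag == "meta" and (attrs.get("name") or "").lower() == "viewport"
        if is_viewport and self.viewport is None:
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares width=device-width in its viewport meta tag."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    settings = finder.viewport.replace(" ", "").lower().split(",")
    return "width=device-width" in settings
```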

JavaScript Navigation

Navigation menus and links powered solely by JavaScript can hinder crawlability, as some search engine bots may struggle to crawl and index JavaScript-heavy content.

Solution

Ensure your navigation is accessible to crawlers by using HTML links in addition to JavaScript. Use progressive enhancement techniques to provide a basic, crawlable structure that works without JavaScript.
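Google can reliably follow links only when they are `<a>` elements with an `href` attribute containing a URL. The following sketch flags navigation that breaks this rule. It catches anchors with no usable `href`, `javascript:` links, and other elements that navigate through `onclick`. The function name is hypothetical, and the `onclick` rule is deliberately broad, so treat the results as a review list.

```python
from html.parser import HTMLParser


class UncrawlableLinkFinder(HTMLParser):
    """Flags navigation that depends on JavaScript instead of an <a href> URL."""

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            href = (attrs.get("href") or "").strip()
            if href in ("", "#") or href.lower().startswith("javascript:"):
                self.problems.append(("a", attrs.get("onclick") or href or "<no href>"))
        elif "onclick" in attrs:
            # e.g. <span onclick="location.href='/contact/'">: clickable, but not a link to crawlers.
            self.problems.append((tag, attrs["onclick"]))


def find_uncrawlable_links(html):
    finder = UncrawlableLinkFinder()
    finder.feed(html)
    return finder.problems
```

A flagged item such as `<a onclick="go('/about/')">About</a>` becomes crawlable as `<a href="/about/">About</a>`, with any JavaScript behavior layered on top.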

Crawl Budget Wastage

Crawl budget wastage occurs when search engine bots spend too much time crawling low-value or unimportant pages, leading to inefficient use of the crawl budget.

Solution

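Prioritize high-value pages for crawling by optimizing your site structure and internal linking. Use the robots.txt file to block low-value pages, such as internal search results or endless filter combinations, from being crawled, and regularly update your sitemap to guide crawlers to important content. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.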
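Your server logs show where crawl budget actually goes. The following sketch buckets the paths a crawler requested into clean URLs, parameterized URLs and low-value sections, then reports each bucket's share. The `LOW_VALUE_PREFIXES` tuple and the function names are hypothetical examples to adapt to your own site.

```python
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical low-value sections for an example store; adjust to your own site.
LOW_VALUE_PREFIXES = ("/search", "/cart", "/tag/")


def classify(path):
    """Put one requested path into a crawl-budget bucket."""
    parts = urlsplit(path)
    if parts.path.startswith(LOW_VALUE_PREFIXES):
        return "low-value section"
    if parts.query:
        return "parameterized"
    return "clean"


def crawl_budget_report(crawled_paths):
    """Return the share of bot requests per bucket, as fractions that sum to 1."""
    counts = Counter(classify(p) for p in crawled_paths)
    total = sum(counts.values())
    if not total:
        return {}
    return {bucket: count / total for bucket, count in counts.items()}
```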

Summary of Key Points

Addressing crawlability issues is essential for improving your website’s SEO performance. By resolving common problems such as broken links, server errors, poor URL structures, and duplicate content, you can enhance the efficiency of search engine crawlers and ensure your content is properly indexed.

Final Thoughts on Crawlability Optimization

Regular audits, careful configuration of technical elements, and continuous monitoring are key to maintaining optimal crawlability. By implementing the solutions provided, you can enhance your website’s visibility in search results and drive more organic traffic to your site.