What Is the Difference Between Crawling and Indexing?

Crawling is how search engine bots discover web pages; indexing is how search engines analyze and store those pages so they can be retrieved in search results.

Understanding and optimizing these processes are crucial for maximizing site visibility and search engine rankings.

Mastering SEO requires a clear grasp of crawling and indexing, two pivotal processes that shape how search engines interact with your website. This guide explores how they differ, how they affect SEO, and the essential strategies for optimizing both.

Key Takeaways:

- Crawling vs. Indexing: Crawling is the process of discovering web pages, while indexing involves storing and organizing this content for retrieval.

- SEO Impact: Efficient crawling helps search engines find your pages, while successful indexing determines whether those pages can appear in search results.

- Optimization Strategies: Clear site structure, effective internal linking, and proper HTML usage are key to enhancing both crawling and indexing.

Difference Between Crawling and Indexing

Optimizing your website for search engines requires a thorough understanding of how search engines work. Two fundamental processes in SEO are crawling and indexing.

Though often used interchangeably, these processes are distinct, each playing a critical role in how your website appears in search engine results.

This article delves into the differences between crawling and indexing, their significance, and how they impact your site’s SEO performance.

Search engines like Google use automated bots, known as crawlers or spiders, to discover and fetch web content. What the bots fetch is then analyzed and stored in a separate step, which is why the two processes are worth understanding individually.

Both processes are integral to how search engines compile their databases of web content, but they serve different purposes.

What is Crawling?

Definition of Crawling

Crawling is the process by which search engine bots systematically browse the internet to discover new and updated web pages. These bots start from a list of known URLs and follow links from these pages to find new content. This process is akin to a librarian searching through shelves to discover new books.

How Crawling Works

Crawlers like Googlebot follow a set of rules to determine which pages to visit, how often to revisit them, and how many pages to fetch from a site. These bots read each page’s code and follow its links to find new URLs. Crawling is continuous: pages are revisited so that the search engine’s view of the web stays up to date.
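To make the link-following idea concrete, here is a minimal sketch of breadth-first discovery. It is an illustration only, not how Googlebot is implemented: the `crawl` function, the `LinkExtractor` class, and the in-memory `SITE` dictionary are all hypothetical names, and the "site" is stored in a dictionary so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first discovery; returns URLs in the order they were fetched.

    `fetch` is a caller-supplied function that maps a URL to its HTML,
    or returns None when the page cannot be retrieved.
    """
    frontier = deque(seed_urls)   # URLs waiting to be visited
    seen = set(seed_urls)         # URLs already queued, to avoid loops
    fetched = []
    while frontier and len(fetched) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue  # unreachable page: nothing to follow
        fetched.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return fetched


# Hypothetical three-page site held in memory (assumption for the demo).
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about#team">Team</a> <a href="/missing">Gone</a>',
}

discovered = crawl(["https://example.com/"], SITE.get)
print(discovered)
```

The `seen` set is what keeps the crawler from looping between pages that link to each other, and the `/missing` link shows how a broken link becomes a dead end: the URL is queued but never yields a page.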

Importance of Crawling in SEO

Effective crawling is essential for ensuring that search engines can find and access your website’s content. If crawlers cannot discover your pages, those pages cannot be indexed, meaning they won’t appear in search results.

Optimizing your site for crawling involves creating a clear and logical site structure, maintaining clean URLs, and ensuring that your site is free from errors that might block crawlers.

What is Indexing?

Definition of Indexing

Indexing is the process by which search engines store and organize the content discovered during crawling. Once a page is crawled, the search engine analyzes its content and adds it to its index, a massive database of web pages. This index is what the search engine consults to retrieve information quickly when users perform a search.

How Indexing Works

After a crawler finds a page, the content of that page is parsed and analyzed. This includes reading the text, examining the meta tags, assessing the relevance of keywords, and understanding the page’s overall context.

The indexed information is then stored in the search engine’s database, categorized by relevant keywords and topics.
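One common way to picture "categorized by relevant keywords" is an inverted index, which maps each term to the pages that contain it. The sketch below is a deliberately simplified assumption, not a description of any real search engine’s index; `tokenize`, `build_index`, `search`, and the sample `PAGES` are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each term to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index, query):
    """Return URLs containing every query term, sorted for stable output."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set.intersection(*(index.get(term, set()) for term in terms))
    return sorted(matches)


# Hypothetical crawled pages (assumption for the demo).
PAGES = {
    "/crawling": "Crawling is how search engine bots discover pages",
    "/indexing": "Indexing stores pages so search results can be retrieved",
}

index = build_index(PAGES)
print(search(index, "search pages"))
print(search(index, "bots"))
```

Because lookups go from term to pages rather than scanning every page for every query, retrieval stays fast even when the collection is large. Real indexes also store signals for ranking, which this sketch leaves out.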

Importance of Indexing in SEO

Indexing is critical for your site’s visibility in search results. If a page is not indexed, it will not appear in search engine results pages (SERPs), regardless of its content’s relevance or quality.

Ensuring that your pages are indexable involves using proper HTML tags, avoiding duplicate content, and utilizing sitemaps to guide search engines to your most important pages.
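As an illustration of the sitemap point, the following sketch builds a small XML sitemap with Python’s standard library. The `build_sitemap` helper, the URLs, and the dates are hypothetical; only the `urlset`, `url`, `loc`, and `lastmod` element names and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and modification dates (assumption for the demo).
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/crawling-vs-indexing", "2024-02-01"),
])
print(sitemap_xml)
```

A sitemap is a hint rather than a command: listing a URL helps search engines find it, but does not guarantee that it will be indexed.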

Key Differences Between Crawling and Indexing

Purpose

Crawling is about discovery. It involves search engine bots finding new and updated pages on the internet. Indexing, on the other hand, is about storage and retrieval. It involves adding the discovered content to the search engine’s database so it can be retrieved and displayed in response to user queries.

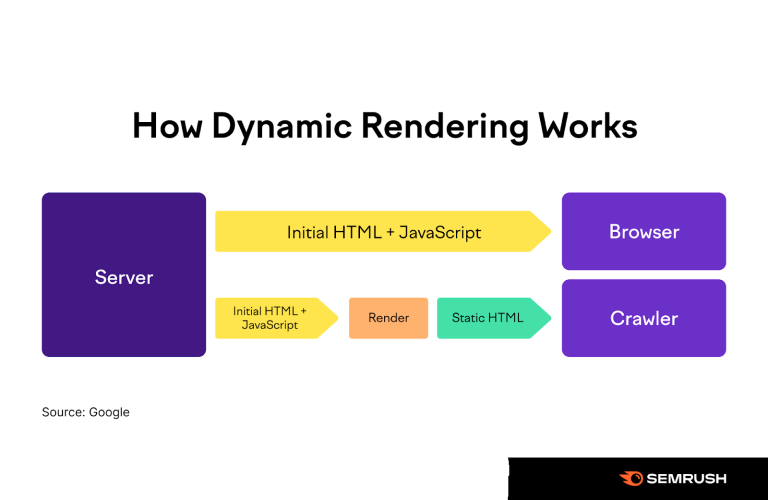

Process

During crawling, bots navigate through the web, following links to find new content. This process does not involve deep analysis of the content itself. Indexing involves analyzing and categorizing the content found during crawling, determining its relevance to various search queries, and storing it in a structured manner.

Impact on SEO

Both processes are vital for SEO but affect it in different ways. Effective crawling ensures that all your site’s pages are discovered by search engines. Successful indexing ensures that these pages are stored and can be retrieved during searches. Without crawling, pages cannot be found. Without indexing, found pages cannot appear in search results.

Enhancing Crawling and Indexing for SEO

Optimizing for Crawling

- Clear Site Structure: Ensure your site has a logical, hierarchical structure that makes it easy for crawlers to navigate.

- Internal Linking: Use internal links to help crawlers find all your important pages.

- XML Sitemaps: Submit a sitemap to search engines to guide crawlers to all your significant content.

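- Robots.txt File: Configure your robots.txt file to control which parts of your site crawlers may fetch. Keep in mind that robots.txt governs crawling, not indexing; to keep a page out of search results, use a noindex directive instead.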
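To see how a robots.txt file affects crawlers, you can test rules locally with Python’s standard `urllib.robotparser` module. The rules and URLs below are a made-up example, not a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (assumption for the demo).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether a given crawler may fetch each URL under these rules.
results = {}
for path in ("/blog/post-1", "/admin/settings"):
    url = "https://example.com" + path
    results[path] = parser.can_fetch("Googlebot", url)
    print(path, results[path])
```

Checking rules this way before deploying them can catch an overly broad `Disallow` line that would hide important pages from crawlers.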

Optimizing for Indexing

- Quality Content: Ensure your content is valuable, unique, and relevant to your audience.

- Proper HTML Tags: Use appropriate meta tags, headers, and alt text to help search engines understand your content.

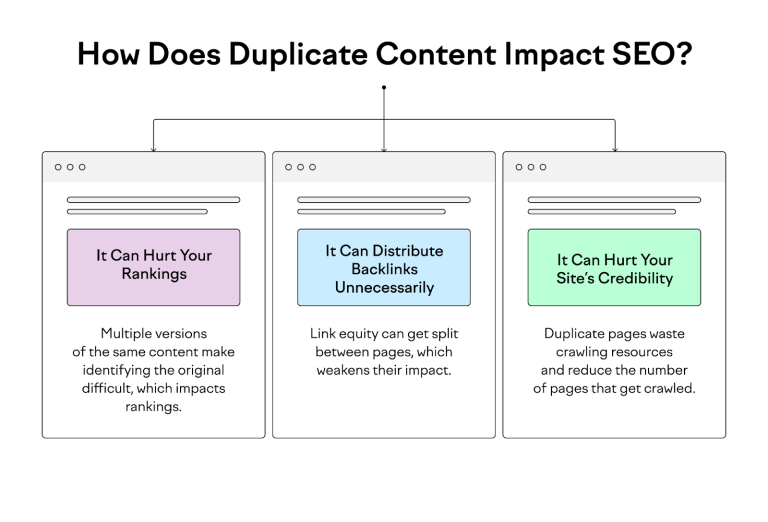

- Avoid Duplicate Content: Ensure that each page on your site offers unique content to avoid indexing issues.

- Sitemaps and Canonical Tags: Use canonical tags to tell search engines which version of a duplicated page you want indexed, and list those preferred URLs in your sitemap so the two signals agree.
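The tags mentioned above can be checked programmatically. This sketch reads a page’s canonical URL and robots meta directives using the standard `html.parser` module; the `IndexingSignals` class, the `inspect_page` helper, and the sample HTML are hypothetical, and the check covers only the HTML itself, not HTTP headers.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Records the canonical URL and robots meta directives found in a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.robots.add(part.strip().lower())


def inspect_page(html):
    """Summarize the indexing-related tags in an HTML document."""
    parser = IndexingSignals()
    parser.feed(html)
    return {"canonical": parser.canonical, "noindex": "noindex" in parser.robots}


# Hypothetical page markup (assumption for the demo).
SAMPLE = """
<html><head>
  <title>Red Shoes</title>
  <link rel="canonical" href="https://example.com/shoes/red" />
  <meta name="robots" content="noindex, follow">
</head><body>...</body></html>
"""

report = inspect_page(SAMPLE)
print(report)
```

Running a check like this across important pages is one way to catch a stray `noindex` or a canonical tag pointing at the wrong URL.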

Common Crawling and Indexing Issues

Crawling Issues

- Broken Links: Links that lead to error pages are dead ends for crawlers and can leave the content they were meant to point to undiscovered.

- Server Errors: Frequent server errors can block crawlers from accessing your site.

- Poor Navigation: Complicated or unclear navigation can hinder crawlers from finding all your pages.

Indexing Issues

- Duplicate Content: When several URLs serve the same content, search engines have to choose one version to index and may not choose the one you prefer.

- Noindex Tags: Pages with noindex tags won’t be indexed, which can be problematic if applied to important content.

- Thin Content: Pages with little valuable content may be left out of the index or, if indexed, may rank poorly.
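As a rough illustration of how duplicate content might be spotted in a site audit, the sketch below groups URLs whose text is identical after normalizing case and whitespace. It is a simplification under stated assumptions: `fingerprint`, `find_duplicates`, and the sample pages are hypothetical, and exact hashing will not catch near-duplicates that differ by a few words.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = re.sub(r"\s+", " ", text.lower()).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical page texts (assumption for the demo).
PAGE_TEXT = {
    "/shoes": "Red running shoes for every season.",
    "/shoes?ref=home": "Red  running shoes\nfor every season.",
    "/boots": "Waterproof hiking boots.",
}

duplicates = find_duplicates(PAGE_TEXT)
print(duplicates)
```

Each group in the result is a candidate for a canonical tag or a redirect, so that only one URL per piece of content competes for a place in the index.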

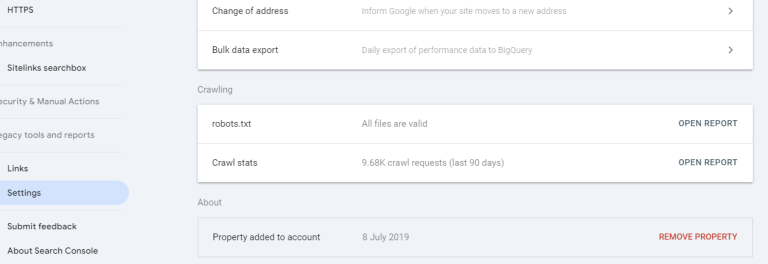

Tools for Monitoring Crawling and Indexing

Google Search Console

Google Search Console is an essential tool for monitoring how Google crawls and indexes your site. It provides insights into crawling errors, indexing status, and overall site performance. Regularly checking Google Search Console helps you identify and fix issues promptly.

Screaming Frog SEO Spider

Screaming Frog SEO Spider is a powerful tool for auditing your site’s crawlability and indexability. It simulates a search engine crawler, identifying broken links, duplicate content, and other issues that might affect how your site is crawled and indexed.

Ahrefs Site Audit

Ahrefs Site Audit provides a comprehensive analysis of your site’s health, including crawl and indexing issues. It offers actionable insights to help you optimize your site for better crawling and indexing performance.

FAQs

What is the primary difference between crawling and indexing?

Crawling is the process by which search engine bots discover new and updated pages on the web. Indexing is the subsequent process of analyzing and storing this content in the search engine’s database for retrieval during searches.

How can I ensure my site is crawled efficiently?

Ensure your site has a clear structure, use internal linking effectively, submit an XML sitemap to search engines, and configure your robots.txt file properly to manage crawling.

What factors affect whether a page is indexed?

Factors include the quality and relevance of the content, proper use of HTML tags, absence of duplicate content, and correct use of sitemaps and canonical tags.

Why might a page not be indexed even if it’s crawled?

A page might not be indexed if it has low-quality content, duplicate content, noindex tags, or if it doesn’t meet the search engine’s quality guidelines.

What tools can help monitor crawling and indexing?

Google Search Console, Screaming Frog SEO Spider, and Ahrefs Site Audit are excellent tools for monitoring and optimizing crawling and indexing.

Can crawling issues affect indexing?

Yes, if search engine crawlers cannot find or access your pages due to crawling issues, those pages cannot be indexed, impacting your site’s visibility in search results.

Conclusion

Summary of Key Points

Crawling and indexing are fundamental processes in SEO. Crawling involves the discovery of new and updated content by search engine bots, while indexing involves analyzing and storing this content for retrieval in search results. Both processes are essential for ensuring your site’s visibility and ranking in search engines.