How to Use Google’s Robots.txt Tester for Better Crawlability

The Google Robots.txt Tester is a tool in Google Search Console for testing and validating a robots.txt file. Websites use this file to tell search engine crawlers such as Googlebot which URLs they may crawl and which to avoid.

The tester checks whether the file is correctly formatted, flags errors, and shows how its rules apply to specific URLs. By using this tool, web developers can confirm that their robots.txt file controls search engine crawling as intended.

What is a Robots.txt File?

Definition and Purpose

A Robots.txt file is a plain text file located at the root of your website that tells search engine crawlers which pages or sections of your site they can or cannot access. By specifying allowed and disallowed paths, you manage which parts of your site are crawled. The file controls crawling rather than indexing: a disallowed URL can still appear in search results if other pages link to it.
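Because the file always sits at the root of the host, its address can be derived from any page URL. A minimal sketch in Python using the standard library's `urllib.parse`; the function name `robots_url` and the example.com address are illustrative assumptions, not part of any Google tool:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; replace the path and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/post?id=1"))
# https://www.example.com/robots.txt
```

Each host has its own file, so a subdomain such as shop.example.com would need a separate Robots.txt at its own root.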

How Robots.txt Affects Crawlability

Robots.txt plays a critical role in crawlability by steering crawlers toward the most important parts of your site and away from less significant areas. This helps ensure that your crawl budget is used efficiently, so that crawlers spend their time on high-value content.

Google’s Robots.txt Tester

Google’s Robots.txt Tester is a feature within Google Search Console that allows webmasters to check the validity of their Robots.txt files. The tool helps identify and resolve issues, ensuring that the directives in the file are correctly implemented and understood by Googlebot.

Benefits of Using Google’s Robots.txt Tester

Using the Robots.txt Tester provides several benefits:

- Error Identification: Quickly identify syntax errors or misconfigurations.

- Directive Verification: Ensure that your directives are being followed as intended.

- Improved Crawlability: Optimize your Robots.txt file to enhance overall site crawlability.

How to Access and Use Google’s Robots.txt Tester

Step-by-Step Guide to Accessing the Tool

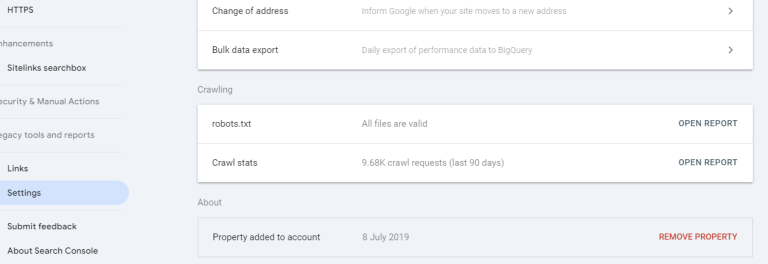

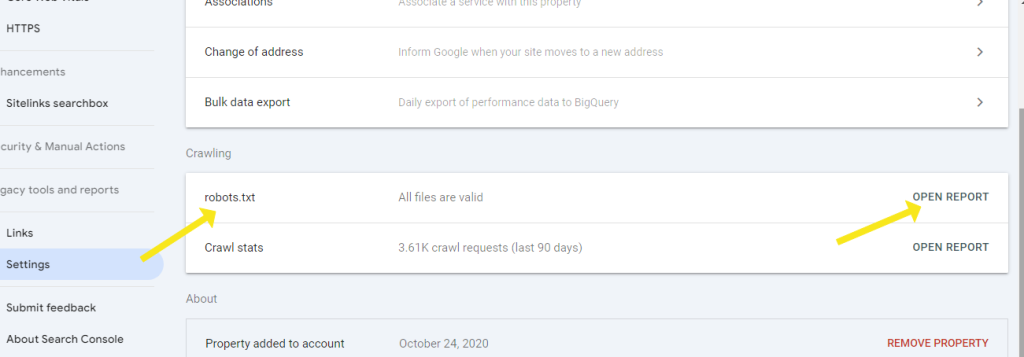

- Log in to Google Search Console: Navigate to your property in Google Search Console.

- Select the Robots.txt Tester: Under the “Crawl” section, find and click on “Robots.txt Tester.”

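- View and Edit Your Robots.txt File: The tool displays your current Robots.txt file and lets you edit a copy of it in the browser. Edits made in the tool are not written to your live file; to apply them, upload the updated file to your server.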

Basic Features of the Robots.txt Tester

The Robots.txt Tester provides several features:

- Syntax Highlighting: Helps identify errors and directives within the file.

- Test URL Paths: Allows you to test specific URLs against your Robots.txt rules to see if they are blocked or allowed.

- Preview Changes: See how your edits would affect the tested URLs before you upload the updated file to your server.

Creating and Editing a Robots.txt File

Best Practices for Writing a Robots.txt File

When creating or editing your Robots.txt file, follow these best practices:

- Disallow Unnecessary Pages: Block pages that are not valuable for SEO, such as admin areas or duplicate content.

- Allow Important Pages: Ensure that key pages, such as product listings or main content, are not blocked.

- Use Specific Directives: Be precise with your rules to avoid unintentional blocking of important content.
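One way to keep these practices honest is to write down which paths must stay crawlable and which must stay blocked, then check a draft file against both lists. The sketch below uses Python's standard `urllib.robotparser`; the file contents, the two path lists, and the helper name `find_violations` are all hypothetical. Python's parser is not Google's, so treat the result as a first check rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft file and expectations for an example shop.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""

MUST_ALLOW = ["/", "/products/", "/products/blue-widget"]
MUST_BLOCK = ["/admin/", "/admin/users", "/search?q=widgets"]


def find_violations(robots_txt, must_allow, must_block, user_agent="Googlebot"):
    """Return a list of paths whose verdict contradicts the expectations."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    violations = []
    for path in must_allow:
        if not parser.can_fetch(user_agent, path):
            violations.append(f"blocked but should be crawlable: {path}")
    for path in must_block:
        if parser.can_fetch(user_agent, path):
            violations.append(f"crawlable but should be blocked: {path}")
    return violations


print(find_violations(ROBOTS_TXT, MUST_ALLOW, MUST_BLOCK))  # []
```

An empty list means the draft matches the stated expectations; any entry points at a rule that is too broad or too narrow.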

Common Directives Used in Robots.txt Files

Some common directives include:

- User-agent: Specifies which crawlers the rule applies to (e.g., User-agent: Googlebot).

- Disallow: Blocks access to specific pages or directories (e.g., Disallow: /private/).

- Allow: Permits crawling of a specific path inside an otherwise disallowed directory (e.g., Allow: /private/press-kit.html alongside Disallow: /private/).

- Sitemap: Provides the location of your sitemap (e.g., Sitemap: http://www.example.com/sitemap.xml).
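The four directives can be seen working together in a small file. The sketch below parses a hypothetical Robots.txt with Python's standard `urllib.robotparser` (the `site_maps()` method needs Python 3.8 or later). The file contents and paths are assumptions for illustration. The Allow line is listed before the Disallow line because Python's parser applies the first matching rule in file order, whereas Googlebot picks the most specific rule regardless of order.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file using the four directives described above.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The Allow rule carves one page out of the disallowed directory.
print(parser.can_fetch("Googlebot", "/private/press-kit.html"))  # True
print(parser.can_fetch("Googlebot", "/private/report.pdf"))      # False

# Sitemap lines are collected separately from the crawl rules.
print(parser.site_maps())  # ['http://www.example.com/sitemap.xml']
```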

Testing Your Robots.txt File with Google’s Tool

Conducting a Robots.txt Test

To test your Robots.txt file:

- Enter a URL Path: Input the path you want to test in the Robots.txt Tester.

- Run the Test: Click “Test” to see if the URL is blocked or allowed.

- Review Results: The tool will indicate whether the URL is allowed or blocked based on your Robots.txt rules.

Interpreting Test Results

The test results will show either “Allowed” or “Blocked” for the entered URL path. If a URL is incorrectly blocked, adjust your Robots.txt file accordingly and retest until the desired outcome is achieved.
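The same enter-a-path, read-the-verdict loop can be approximated offline for several paths at once. This is a rough sketch with Python's standard `urllib.robotparser`, not a reimplementation of Google's tool; the file contents, the paths, and the helper name `check_paths` are assumptions. Results can differ from Googlebot's for files that use wildcards or overlapping Allow and Disallow rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with two blocked directories for Googlebot.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/
Disallow: /cart/
"""


def check_paths(robots_txt, user_agent, paths):
    """Map each path to 'Allowed' or 'Blocked' for the given crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        path: "Allowed" if parser.can_fetch(user_agent, path) else "Blocked"
        for path in paths
    }


results = check_paths(
    ROBOTS_TXT,
    "Googlebot",
    ["/", "/admin/settings", "/products/shoes", "/cart/checkout"],
)
for path, verdict in results.items():
    print(f"{verdict:8} {path}")
```

With this file, the two paths under /admin/ and /cart/ come back as Blocked and the other two as Allowed.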

Troubleshooting Common Issues

Identifying and Fixing Syntax Errors

Syntax errors are common issues in Robots.txt files. These can include missing colons, incorrect spacing, or unsupported directives. The Robots.txt Tester highlights these errors, making it easier to identify and correct them.
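A few of these mistakes can be caught with a simple line-by-line check before the file ever reaches the tester. The sketch below is a deliberately small linter, not the logic Google uses. The set of accepted directive names reflects the fields Google documents as supported (user-agent, allow, disallow, sitemap); the function name `lint_robots` and the sample file are hypothetical.

```python
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots(text):
    """Return (line_number, message) pairs for common syntax problems."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and padding
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing colon"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        name = field.lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append((number, f"unsupported directive '{field}'"))
        elif name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow"}:
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


SAMPLE = """\
User-agent: *
Disallow /admin/
Dissallow: /tmp/
Disallow: private/
"""

for number, message in lint_robots(SAMPLE):
    print(f"line {number}: {message}")
# line 2: missing colon
# line 3: unsupported directive 'Dissallow'
# line 4: path should start with '/'
```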

Resolving Conflicts in Directives

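Conflicting directives make a Robots.txt file hard to reason about. For example, if a URL matches both an Allow and a Disallow rule, Googlebot applies the most specific rule (the one with the longest matching path), which may not be the rule you expected. Review and simplify your rules to ensure clarity and prevent conflicts.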
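The precedence Google documents for such conflicts (the longest matching path wins, and on a tie the less restrictive rule wins) can be sketched in a few lines. This simplified version matches plain path prefixes only and ignores the `*` and `$` wildcards; the function name `resolve` and the sample rules are hypothetical.

```python
def resolve(rules, path):
    """Decide whether path is allowed under longest-match precedence.

    rules is a list of (directive, pattern) pairs, where directive is
    'allow' or 'disallow' and pattern is a plain path prefix.
    """
    best_length = -1
    allowed = True  # a path that matches no rule is allowed
    for directive, pattern in rules:
        if not pattern or not path.startswith(pattern):
            continue  # empty patterns and non-matching rules are ignored
        is_allow = directive == "allow"
        longer = len(pattern) > best_length
        tie_won_by_allow = len(pattern) == best_length and is_allow
        if longer or tie_won_by_allow:
            best_length = len(pattern)
            allowed = is_allow
    return allowed


RULES = [("disallow", "/private/"), ("allow", "/private/press/")]

print(resolve(RULES, "/private/press/kit.html"))  # True: the Allow rule is longer
print(resolve(RULES, "/private/notes.txt"))       # False: only Disallow matches
print(resolve(RULES, "/blog/"))                   # True: no rule matches
```

Listing the rules in the opposite order gives the same answers, which is the point of length-based precedence: rule order in the file does not decide the outcome.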

FAQs about Google’s Robots.txt Tester

What is Google’s Robots.txt Tester and how does it work?

Google’s Robots.txt Tester is a tool in Google Search Console that helps webmasters check and validate their Robots.txt files, ensuring that the directives are correctly implemented and understood by Googlebot.

How can I access Google’s Robots.txt Tester?

You can access the Robots.txt Tester through Google Search Console by navigating to the “Crawl” section and selecting “Robots.txt Tester.”

What are common mistakes when using Robots.txt files?

Common mistakes include syntax errors, conflicting directives, blocking important pages by accident, and leaving low-value pages open to crawling.

How can Google’s Robots.txt Tester improve my site’s SEO?

By identifying and resolving issues with your Robots.txt file, the Tester helps improve crawlability, so that important pages can be crawled and indexed, which supports overall SEO performance.

What are the best practices for writing a Robots.txt file?

Best practices include using clear and specific directives, avoiding unnecessary blocking, and regularly auditing the file to ensure it remains effective.

How often should I audit my Robots.txt file?

Regular audits should be conducted, especially after significant changes to your site’s structure or content, to ensure that the Robots.txt file continues to serve its intended purpose effectively.

Conclusion

Google’s Robots.txt Tester is an invaluable tool for optimizing your site’s crawlability and ensuring effective SEO performance. By understanding how to create and manage a Robots.txt file, testing it with Google’s tool, and continuously monitoring and auditing its effectiveness, you can enhance your site’s visibility and ranking in search engine results.