How to Use Crawl Stats to Improve Your Site’s SEO

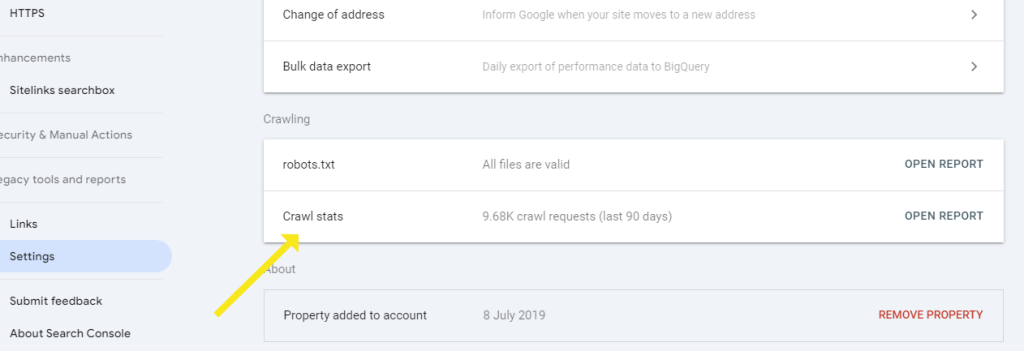

Google Search Console is a free tool that provides valuable insights into a website’s visibility and search performance, including how well it’s indexed and the search queries driving traffic.

Crawl stats within this tool show how Google’s crawler, Googlebot, interacts with a website and how efficiently the server responds. Steady, regular crawling usually suggests a healthy site, while a sharp or sustained drop may indicate issues worth investigating.

Analyzing crawl stats helps you see how Googlebot is crawling your pages, monitor server performance, and address issues such as slow server response times and problems that affect mobile crawling.

Optimizing based on these stats involves improving content accessibility, server response, and internal linking.

Regularly reviewing your hosting plan, caching strategy, and content updates helps ensure that fresh content is crawled promptly. Continuously adapting based on crawl data is vital for maintaining and improving search performance.

Understanding Crawl Stats

What Are Crawl Stats?

Crawl stats are data points that indicate how often and how thoroughly search engine bots are scanning your website. These statistics include information on the number of pages crawled, the amount of data downloaded, and the time spent downloading pages.

These metrics help webmasters understand how search engines are accessing their site and identify areas for improvement.

Why Crawl Stats Matter for SEO

Crawl stats are crucial because they reflect how easily search engines can access your website. If search engines struggle to crawl your site, pages may be indexed poorly or not at all, which in turn reduces your site’s visibility in search results.

Analyzing crawl stats helps you identify and fix issues that may hinder search engines from effectively indexing your site.

Accessing Crawl Stats

Using Google Search Console

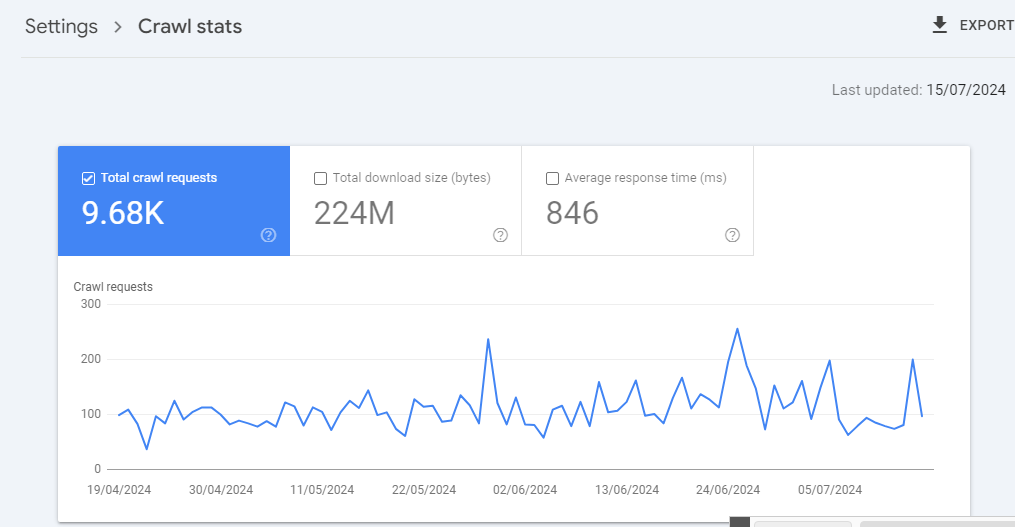

Google Search Console is an essential tool for accessing crawl stats. It provides detailed reports on crawl activity, highlighting issues that might affect your site’s SEO. To open the report, go to “Settings” and select “Crawl stats”. The report shows the total number of crawl requests made by Googlebot, the total download size, and the average response time, along with breakdowns by response code, file type, crawl purpose, and Googlebot type.

Third-Party Tools for Crawl Stats Analysis

Several third-party tools, such as Screaming Frog, Ahrefs, and SEMrush, offer complementary insights. Rather than reporting Google’s own crawl activity, they crawl your site themselves in the way a search engine bot would. This can surface more granular data and lets you cross-reference findings with other SEO metrics for a comprehensive view of your site’s crawlability and performance.

Key Metrics in Crawl Stats

Crawl Requests

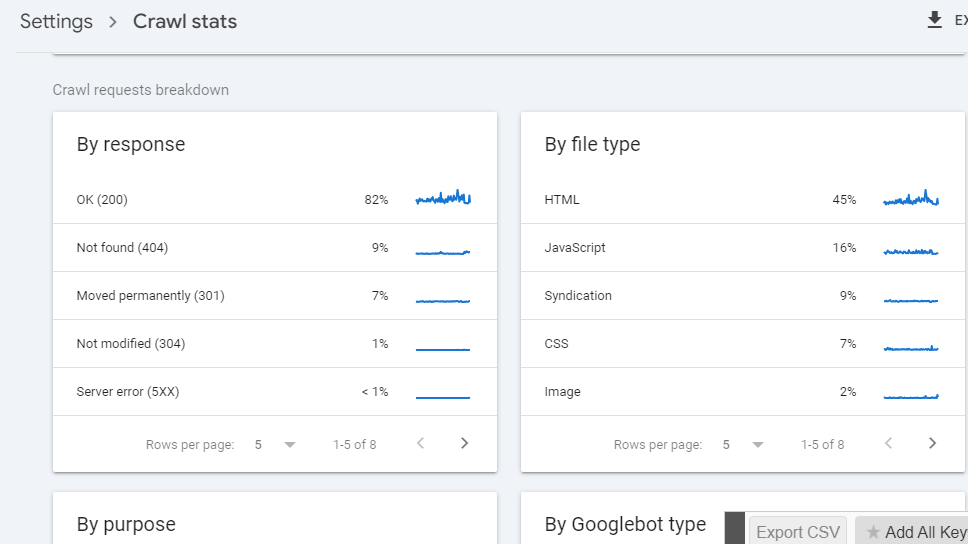

The number of crawl requests indicates how often search engines visit your site. A high number of crawl requests generally suggests good crawlability, but if the crawl requests are concentrated on less important pages, it may indicate a need for optimization.
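If you have access to your server’s raw access logs, you can check where crawl requests land yourself. The sketch below assumes logs in the common Apache/Nginx “combined” format and groups requests whose user agent contains “Googlebot” by their first path segment (for example `/blog/`). The function name is hypothetical, and because user-agent strings can be spoofed, a production version should also verify Googlebot with a reverse DNS lookup.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Assumed Apache/Nginx "combined" log format:
# ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_RE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (\S+) [^"]*" \d{3} \S+ "[^"]*" "([^"]*)"'
)


def googlebot_requests_by_section(lines):
    """Count requests claiming to be Googlebot, grouped by first path segment."""
    counts = Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if not match:
            continue  # skip lines that don't fit the assumed format
        path, user_agent = match.groups()
        if "Googlebot" not in user_agent:
            continue  # ignore browsers and other bots
        # Drop the query string, then take the first non-empty path segment.
        segments = [s for s in urlsplit(path).path.split("/") if s]
        section = f"/{segments[0]}/" if segments else "/"
        counts[section] += 1
    return counts
```

A section such as `/tag/` receiving a large share of requests would be a candidate for the kind of optimization described above.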

Downloaded Kilobytes

This metric shows the amount of data downloaded by search engine bots. Large amounts of data might suggest issues like oversized images or unoptimized code, which can slow down the crawling process and affect user experience.
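To see which kinds of files account for the downloaded bytes, you can total the response sizes that Googlebot received per file extension. This is a sketch assuming the “combined” log format, where the field after the status code is the response size in bytes (or `-` when no body was sent); the function name and the “(no extension)” label are hypothetical.

```python
import re
from collections import defaultdict
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Assumed "combined" log format; captures path, response bytes, and user agent.
LOG_RE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (\S+) [^"]*" \d{3} (\d+|-) "[^"]*" "([^"]*)"'
)


def googlebot_bytes_by_type(lines):
    """Return {extension: (requests, total_bytes, average_bytes)} for Googlebot."""
    totals = defaultdict(lambda: [0, 0])  # extension -> [requests, bytes]
    for line in lines:
        match = LOG_RE.match(line)
        if not match or "Googlebot" not in match.group(3):
            continue
        path, size = match.group(1), match.group(2)
        # Extension-less paths (typical for HTML pages) are grouped together.
        ext = PurePosixPath(urlsplit(path).path).suffix.lower() or "(no extension)"
        totals[ext][0] += 1
        totals[ext][1] += 0 if size == "-" else int(size)
    return {ext: (n, b, b / n) for ext, (n, b) in totals.items()}
```

A file type with a very high average size (for example, large images) is a natural place to start optimizing.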

Time Spent Downloading a Page

This metric indicates the time search engines take to download a page. Long download times can be a sign of performance issues, which might hinder efficient crawling and negatively impact your SEO.
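Search Console reports an average, and an average can hide a handful of very slow URLs. As a rough sketch, assuming you already have per-request response times in milliseconds (for example, from a server log that records Nginx’s `$request_time`, which is logged in seconds and would need converting), the hypothetical helper below computes the average and lists URLs that exceeded an illustrative 1,000 ms threshold.

```python
from statistics import mean


def summarize_response_times(samples, slow_ms=1000):
    """Summarize per-request response times.

    samples: iterable of (url, response_time_ms) pairs.
    slow_ms: illustrative threshold above which a URL is flagged as slow.
    Returns (average_ms, sorted URLs with at least one slow response).
    """
    samples = list(samples)
    if not samples:
        return 0.0, []
    average = mean(ms for _, ms in samples)
    slow = sorted({url for url, ms in samples if ms > slow_ms})
    return average, slow
```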

Analyzing Crawl Stats for SEO Improvement

Identifying Crawl Patterns

Regularly analyzing crawl stats helps identify patterns in how search engines interact with your site. Look for trends over time to understand if certain changes to your site impact crawl behavior positively or negatively.

Recognizing Crawl Spikes and Dips

Sudden spikes or dips in crawl activity can indicate issues that need immediate attention. Spikes might be caused by sudden increases in content or technical issues, while dips might suggest that search engines are having difficulty accessing your site.
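One simple way to spot spikes and dips is to compare each day’s crawl requests with the average of the preceding days. The sketch below assumes a chronological list of daily request counts (for example, aggregated from server logs); the 7-day window and the 1.5x and 0.5x thresholds are illustrative assumptions, not values defined by Google.

```python
def flag_spikes_and_dips(daily_counts, window=7, high=1.5, low=0.5):
    """Flag days whose crawl count deviates sharply from the trailing average.

    daily_counts: list of (date_str, requests) in chronological order.
    Returns a list of (date_str, requests, baseline, "spike" | "dip").
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        # Baseline = mean of the `window` days immediately before day i.
        baseline = sum(count for _, count in daily_counts[i - window:i]) / window
        day, count = daily_counts[i]
        if baseline == 0:
            continue  # no meaningful baseline (e.g. a brand-new site)
        ratio = count / baseline
        if ratio >= high:
            flagged.append((day, count, baseline, "spike"))
        elif ratio <= low:
            flagged.append((day, count, baseline, "dip"))
    return flagged
```

Each flagged day is a prompt to check what changed around that date, such as a deployment, a batch of new pages, or server errors.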

Assessing Crawl Budget Utilization

Crawl budget refers to the number of pages search engines will crawl on your site within a given timeframe. Effective utilization of your crawl budget ensures that important pages are crawled and indexed regularly, improving your site’s SEO.
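To gauge how well your crawl budget is used, you can measure what share of crawl requests reaches the pages you care about. This hypothetical helper takes crawled paths (for example, from server logs) and a list of URL prefixes you consider important. It also reports the share of requests spent on parameterized URLs, which are often low-value duplicates.

```python
from urllib.parse import urlsplit


def crawl_budget_share(crawled_paths, important_prefixes):
    """Return (share_on_important_pages, share_on_parameterized_urls)."""
    paths = list(crawled_paths)
    if not paths:
        return 0.0, 0.0
    prefixes = tuple(important_prefixes)
    # Compare prefixes against the path only, ignoring any query string.
    important = sum(1 for p in paths if urlsplit(p).path.startswith(prefixes))
    parameterized = sum(1 for p in paths if urlsplit(p).query)
    return important / len(paths), parameterized / len(paths)
```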

Optimizing Site Structure

Importance of a Logical Site Structure

A well-organized site structure makes it easier for search engines to crawl and index your pages. Ensure your site follows a logical hierarchy, with clear paths from the homepage to deeper pages.
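A common way to check for clear paths is to measure click depth: how many links a crawler must follow from the homepage to reach each page. The sketch below runs a breadth-first search over an internal-link graph. It assumes you already have that graph as a dictionary (for example, built from a site crawler export), and the page URLs are made up for illustration. Pages missing from the result can’t be reached by following links from the homepage.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search over an internal-link graph.

    links: dict mapping each page URL to the URLs it links to.
    Returns {url: number of clicks from the homepage}; unreachable pages are absent.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths
```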

Creating a Hierarchical URL Structure

Organize your URLs in a hierarchical manner to reflect the structure of your site. This helps search engines understand the relationship between different pages and prioritize crawling more important content.
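In a hierarchical URL structure, each path also names the sections a page belongs to. The small hypothetical helper below derives those parent sections from a path, which is one way to check that a URL such as `/products/shoes/running/` sits under its logical parents.

```python
def ancestor_paths(path):
    """Return the parent sections implied by a hierarchical URL path."""
    segments = [s for s in path.strip("/").split("/") if s]
    ancestors = ["/"]  # every page sits under the homepage
    for i in range(1, len(segments)):
        ancestors.append("/" + "/".join(segments[:i]) + "/")
    return ancestors
```

For `/products/shoes/running/`, this yields `/`, `/products/`, and `/products/shoes/`, each of which should ideally exist as a real page that links down to its children.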

Improving Internal Linking

Internal links help search engines discover new content and understand your site’s structure. Use descriptive anchor text and link to important pages from various parts of your site to improve crawlability.
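Internal linking gaps often show up as orphan pages: URLs listed in your sitemap that no other page links to. Assuming you have the sitemap URLs and an internal-link graph (a dictionary mapping each page to the pages it links to, for example from a crawler export), this hypothetical helper lists sitemap URLs that receive no internal links.

```python
def find_orphan_pages(sitemap_urls, links, home="/"):
    """Return sitemap URLs that no crawled page links to (excluding the homepage)."""
    linked = {target for targets in links.values() for target in targets}
    return sorted(url for url in sitemap_urls if url not in linked and url != home)
```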

Enhancing Site Performance

Page Speed and Its Impact on Crawl Stats

Page speed is a critical factor for both user experience and crawlability. Slow server responses can reduce how many pages search engine bots crawl in a given period, which may lead to incomplete indexing and weaker search visibility.

Tools to Measure and Improve Page Speed

Use tools like Google PageSpeed Insights, GTmetrix, and Pingdom to measure your site’s speed performance. These tools provide actionable recommendations, such as optimizing images, leveraging browser caching, and minifying CSS and JavaScript files.

Managing Crawl Budget

What is Crawl Budget?

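Crawl budget is the number of pages a search engine will crawl on your site within a specified period. Google describes it as a combination of crawl capacity (how much crawling your server can handle without slowing down) and crawl demand (how much Google wants to crawl your content). Both are influenced by factors such as the size of your site, the quality and popularity of your content, how often it changes, and your site’s performance.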

How to Optimize Crawl Budget

Help search engines prioritize your important pages by keeping your sitemap up to date, using robots.txt to block low-value pages, and avoiding duplicate content. Regularly updating and adding high-quality content also encourages search engines to spend their crawl budget on the pages that matter.
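Duplicate content often comes from the same page being reachable under several URLs. The sketch below groups URLs that collapse to the same normalized form. The normalization rules (lowercasing scheme and host, dropping `utm_` tracking parameters, sorting the remaining parameters, ignoring trailing slashes and fragments) are assumptions that may not hold for every site, since on some servers `/shoes` and `/shoes/` are genuinely different pages.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalize(url):
    """Normalize a URL under the assumed rules described above."""
    parts = urlsplit(url)
    # Keep non-tracking parameters in a stable (sorted) order.
    query = sorted((k, v) for k, v in parse_qsl(parts.query) if not k.startswith("utm_"))
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


def duplicate_groups(urls):
    """Return {normalized_url: [original URLs]} for groups with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Each resulting group is a candidate for a canonical URL, a redirect, or consistent internal linking to one version.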

Managing Low-Value Pages

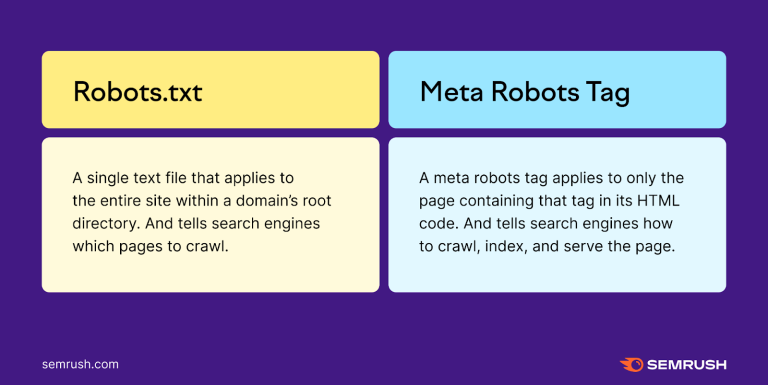

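Identify and manage low-value pages that do not contribute to your SEO goals, such as duplicate content, thin content, and pages with little or no search value. Use robots.txt to stop search engines from spending crawl budget on pages they don’t need to crawl, and use noindex tags on pages that can be crawled but shouldn’t appear in search results. Don’t combine the two on the same page: search engines can only see a noindex tag on pages they are allowed to crawl, so a page blocked in robots.txt may still be indexed if other sites link to it.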
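Thin content can be flagged roughly by counting the visible words on each page. The sketch below uses Python’s built-in `html.parser` to count words outside `<script>` and `<style>` elements. The 300-word threshold and the function names are hypothetical, and a low word count is only a prompt for manual review, not proof that a page lacks value.

```python
from html.parser import HTMLParser


class _WordCounter(HTMLParser):
    """Counts whitespace-separated words outside <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.words = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def word_count(html):
    counter = _WordCounter()
    counter.feed(html)
    counter.close()
    return counter.words


def thin_pages(pages, min_words=300):
    """pages: {url: html}. Return URLs whose visible word count is below min_words."""
    return sorted(url for url, html in pages.items() if word_count(html) < min_words)
```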

Fixing Crawl Errors

Common Crawl Errors

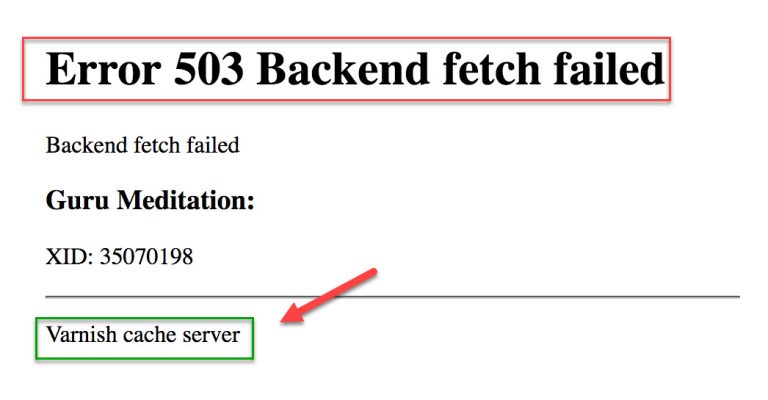

Common crawl errors include 404 not found errors, server errors, and DNS issues. These errors can prevent search engines from accessing your pages, negatively impacting crawlability and SEO.
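404 and 5xx errors also appear in your server’s access logs, which shows exactly which URLs Googlebot failed on. The sketch below assumes the “combined” log format and matches Googlebot by user-agent string only (the function name is hypothetical). DNS failures never reach your server, so they won’t show up here and need to be checked in Search Console instead.

```python
import re
from collections import Counter

# Assumed "combined" log format; captures path, status code, and user agent.
LOG_RE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (\S+) [^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"'
)


def googlebot_errors(lines):
    """Return [((status, path), count), ...] for Googlebot 404 and 5xx responses."""
    errors = Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if not match or "Googlebot" not in match.group(3):
            continue
        path, status = match.group(1), int(match.group(2))
        if status == 404 or status >= 500:
            errors[(status, path)] += 1
    return errors.most_common()  # most frequent errors first
```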

Tools to Identify and Resolve Crawl Errors

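Google Search Console reports the errors Googlebot encounters. The Page indexing report lists URLs that could not be indexed and why, and the Crawl Stats report breaks down responses by status code and shows host status problems such as DNS resolution or server connectivity failures. Use these reports to identify and fix errors promptly. Third-party tools like Screaming Frog and Ahrefs can also help in diagnosing and resolving crawl errors.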

Improving Mobile Crawlability

Mobile Optimization Strategies

Because Google uses mobile-first indexing, crawling and indexing pages primarily with its smartphone crawler, a mobile-friendly site is crucial for crawlability. Use responsive design, optimize for mobile speed, and make sure all content available on desktop is also accessible on mobile devices.
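Responsive design typically depends on a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. As a quick, partial check (it can’t tell you whether the layout actually adapts), the hypothetical helper below extracts a page’s viewport setting using Python’s built-in `html.parser`.

```python
from html.parser import HTMLParser


class _ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name == "viewport" and self.viewport is None:
            self.viewport = attrs.get("content")


def viewport_content(html):
    """Return the viewport meta content, or None if the page has no viewport tag."""
    finder = _ViewportFinder()
    finder.feed(html)
    finder.close()
    return finder.viewport
```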

Testing Mobile-Friendliness

Google retired its Mobile-Friendly Test in December 2023, but tools such as Lighthouse (built into Chrome DevTools) and PageSpeed Insights can still evaluate your site’s mobile performance. These tools highlight issues affecting mobile usability and offer recommendations for improvement.

Using Sitemaps and Robots.txt

Creating and Submitting an XML Sitemap

An XML sitemap helps search engines discover and index your content more efficiently. Keep your sitemap up to date and submit it through Google Search Console and the webmaster tools of other search engines, such as Bing Webmaster Tools.
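The sitemap format is defined by the sitemaps.org protocol: a `<urlset>` element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace containing one `<url>` entry per page, each with a `<loc>` and an optional `<lastmod>`. The sketch below builds one with Python’s standard `xml.etree.ElementTree`; the function name and the example URLs are illustrative.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """entries: iterable of (absolute_url, lastmod 'YYYY-MM-DD'). Returns XML text."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # must be an absolute URL
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format
    return ET.tostring(urlset, encoding="unicode")
```

Only include canonical, indexable URLs, and update `lastmod` when a page’s content actually changes.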

Optimizing Robots.txt for Better Crawlability

Proper configuration of the robots.txt file is crucial for guiding search engines on which pages to crawl or avoid. Ensure that important pages are not accidentally blocked and that low-value pages are excluded from the crawl.
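Before deploying robots.txt changes, you can test them against a list of URLs that must stay crawlable and a list you intend to block. The sketch below uses Python’s standard `urllib.robotparser`. This parser doesn’t implement every detail of Google’s matching (for example, `*` and `$` wildcards inside paths), so treat it as a first check and confirm the behavior in Search Console. The rules, URLs, and function name shown are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking internal search results and cart pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def check_robots(robots_txt, must_allow, should_block, agent="Googlebot"):
    """Return (important URLs that are blocked, low-value URLs that are crawlable)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked_important = [u for u in must_allow if not parser.can_fetch(agent, u)]
    crawlable_low_value = [u for u in should_block if parser.can_fetch(agent, u)]
    return blocked_important, crawlable_low_value
```

Both returned lists should ideally be empty; anything in them points to a rule that needs adjusting.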