Managing JavaScript Content To Ensure Effective Crawling

What is JavaScript Content?

JavaScript content refers to the dynamic and interactive elements that are added to web pages using the JavaScript programming language.

JavaScript content is essential for creating dynamic and interactive web experiences, but it requires careful management to ensure effective crawling and indexing by search engines.

Here are the key points about JavaScript content:

- Interactive Elements: JavaScript is used to create interactive elements such as animations, form validation, and dynamic content updates on web pages.

- Client-Side Execution: JavaScript runs in the user’s web browser, enhancing the functionality and interactivity of web pages without a round trip to the server for every interaction.

- Dynamic Content: JavaScript allows developers to manipulate the Document Object Model (DOM) and respond to user interactions, making web pages more engaging and user-friendly.

- Server-Side Use: While primarily used on the client side, JavaScript can also run on the server through runtimes like Node.js, which is used to build server-side applications such as APIs and database-backed services.

- Crawlability Issues: JavaScript content can pose challenges for search engines, because they must render the page (execute its JavaScript) to see any content that is not in the initial HTML. Rendering takes extra time and resources, which can delay crawling and indexing.

Managing JavaScript Content To Ensure Effective Crawling

Managing JavaScript content to ensure effective crawling involves several strategies to make dynamic and JavaScript content accessible to search engine crawlers.

Here are some key approaches:

1. Progressive Enhancement

- Design a web page that works with or without JavaScript: Provide essential content and functionality in the initial HTML document. This ensures that search engines can access and crawl the basic content even if JavaScript is not executed.

2. Prerendering

- Generate static HTML snapshots: Use services or tools to create static HTML versions of web pages with JavaScript executed. This allows search engines to crawl and index the content as if it were static HTML.

3. Dynamic Rendering

- Server-side detection: Detect on the server whether a request comes from a user or a search engine bot, then serve bots a pre-rendered HTML version and users the normal JavaScript version. Keep the content of both versions equivalent so that search engines index what users actually see.

4. Structured Data

- Add JSON-LD or microdata markup: Use structured data to provide additional information about your web page content. This helps search engines understand the content and can make the page eligible for rich results in search.

5. Internal Linking

- Use internal links effectively: Ensure that internal links are <a> HTML elements with href attributes that point to real URLs. Search engines discover and crawl links in this form, while links that rely only on JavaScript click handlers may not be followed.

6. Testing and Optimization

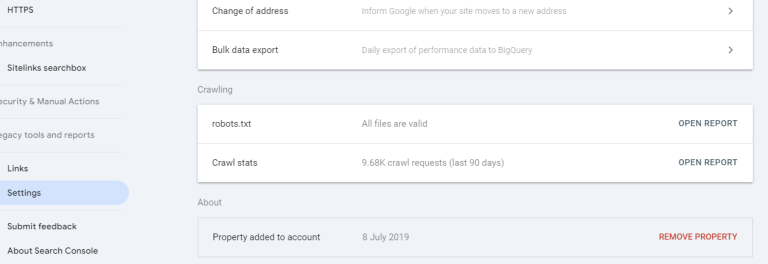

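- Use tools like Google Search Console: Use the URL Inspection tool to check the index status, coverage, and issues of any URL on your website; its live test also shows the rendered HTML and a screenshot of the page as Googlebot sees it. For mobile checks, use Lighthouse or Chrome DevTools device emulation, since Google retired its standalone Mobile-Friendly Test tool in December 2023.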

- Lighthouse tool in Chrome DevTools: Audit and measure the performance, accessibility, best practices, and SEO of your web page.

- URL Inspection tool in Bing Webmaster Tools: Check how Bingbot crawls and renders your web page.

7. Website Structure

- Clear and logical structure: Organize your website with a clear hierarchy and logical link structure to facilitate easy navigation by search engines.

8. Site Speed

- Optimize site speed: Improve server response times and reduce JavaScript payloads. Slow responses limit how many pages a crawler can fetch in a given time, and heavy scripts delay rendering.

9. Robots.txt Files

- Properly configure robots.txt files: Tell search engine bots which URLs to crawl and which to skip. Make sure robots.txt does not block important content or the JavaScript and CSS files that pages need to render; otherwise crawlers may see an incomplete page.

10. Regular Audits

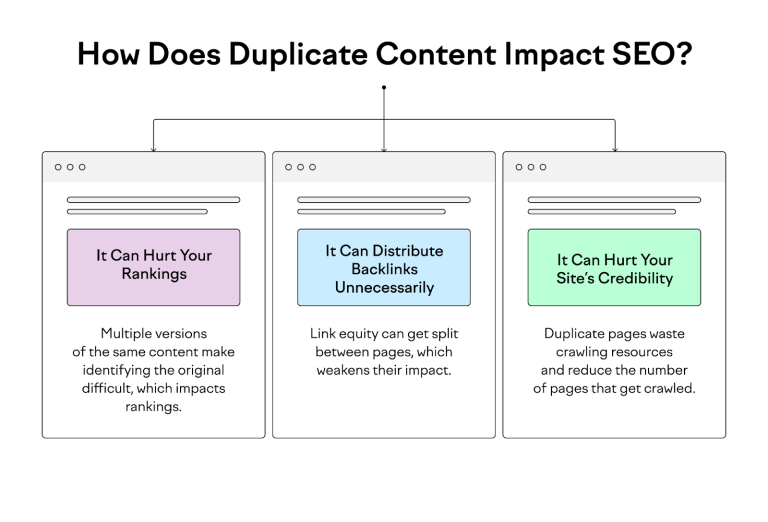

- Conduct regular technical SEO audits: Identify and fix crawlability issues like broken links, incorrect redirects, or duplicate content.
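The internal-linking and audit items above can be partly automated. The TypeScript sketch below, built around a hypothetical `auditLinks` helper, scans a page's HTML for <a> tags and separates links with a usable href from anchors that have none (for example, ones that only work through an onclick handler) or that point nowhere (href="#" or javascript: URLs). It uses regular expressions for brevity, which assumes simple, well-formed markup; a real audit tool should use a proper HTML parser and run on the rendered HTML.

```typescript
// Hypothetical helper for illustration; not part of any SEO tool's API.
interface LinkAudit {
  crawlable: string[];   // href values a crawler can discover and follow
  uncrawlable: string[]; // raw <a ...> tags without a usable href
}

function auditLinks(html: string): LinkAudit {
  const result: LinkAudit = { crawlable: [], uncrawlable: [] };
  // Matches opening <a ...> tags (not </a>, <abbr>, or <area>); assumes simple markup.
  const anchorTag = /<a\b[^>]*>/gi;
  // Captures an href value in double quotes (group 2) or single quotes (group 3).
  const hrefAttr = /\bhref\s*=\s*("([^"]*)"|'([^']*)')/i;

  let match: RegExpExecArray | null;
  while ((match = anchorTag.exec(html)) !== null) {
    const tag = match[0];
    const href = hrefAttr.exec(tag);
    const value = href ? (href[2] ?? href[3] ?? "").trim() : "";
    // Missing, empty, fragment-only, and javascript: hrefs lead to no crawlable URL.
    if (value === "" || /^(#|javascript:)/i.test(value)) {
      result.uncrawlable.push(tag);
    } else {
      result.crawlable.push(value);
    }
  }
  return result;
}
```

Run on `<a href="/shoes">Shoes</a><a onclick="go()">Bags</a>`, it would report "/shoes" as crawlable and the Bags anchor as uncrawlable.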

By implementing these strategies, you can help ensure that your JavaScript content is crawlable and indexable by search engines, improving your website’s visibility in search results.

Understanding JavaScript and Crawling: How Search Engines Crawl JavaScript

Search engines have evolved to crawl and index JavaScript-driven websites, but the process is more complex than for traditional HTML pages. Here’s how search engines typically crawl JavaScript:

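- Initial Fetching: When a search engine discovers a URL, it first checks whether robots.txt allows crawling it, then fetches the page and receives the raw HTML returned by the server. Links present in this HTML can already be discovered at this stage.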

- Rendering: If the page relies on JavaScript, the search engine may render it using a headless browser, executing the JavaScript to build the complete DOM (Document Object Model) that users would see in their browsers. Rendering is often deferred: Google, for example, places pages in a render queue, where a page may wait for a few seconds or longer before it is rendered.

- Content Extraction: Once the rendering is complete, the search engine extracts the rendered HTML. This includes any content that was dynamically loaded or modified by JavaScript.

- Indexing: The extracted content is then processed and indexed by the search engine. This is where the content is analyzed and categorized for search queries.
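Before the initial fetch described above, a well-behaved crawler checks the site's robots.txt. The sketch below illustrates only the rule-matching step for a single group of rules, assuming the precedence defined in RFC 9309: the longest (most specific) matching path wins, Allow wins a tie, and a URL with no matching rule is allowed, with * matching any sequence of characters and a trailing $ anchoring the end of the URL. It skips fetching, parsing, and user-agent group selection, and `isAllowed` is a hypothetical helper, not any search engine's actual code.

```typescript
type RuleType = "allow" | "disallow";

interface Rule {
  type: RuleType;
  path: string; // may contain * (any sequence) and a trailing $ (end of URL)
}

// Converts a robots.txt path pattern into an anchored regular expression.
function patternToRegExp(path: string): RegExp {
  const endsWithDollar = path.charAt(path.length - 1) === "$";
  const body = endsWithDollar ? path.slice(0, -1) : path;
  const escaped = body
    .split("*")
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&")) // escape regex metacharacters
    .join(".*"); // each * becomes "any sequence of characters"
  return new RegExp("^" + escaped + (endsWithDollar ? "$" : ""));
}

// Hypothetical helper: longest matching rule wins; on a tie, allow wins; no match means allowed.
function isAllowed(urlPath: string, rules: Rule[]): boolean {
  let best: Rule | null = null;
  for (const rule of rules) {
    if (rule.path === "") continue; // an empty Disallow line matches nothing
    if (!patternToRegExp(rule.path).test(urlPath)) continue;
    if (
      best === null ||
      rule.path.length > best.path.length ||
      (rule.path.length === best.path.length && rule.type === "allow")
    ) {
      best = rule;
    }
  }
  return best === null || best.type === "allow";
}
```

With Disallow: /static/ and Allow: /static/css/, a path like /static/css/site.css stays crawlable while /static/js/app.js is blocked, so a renderer would get the page's styles but not the script it needs to build the content.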

Differences Between HTML and JavaScript Rendering

HTML content is straightforward for search engines to crawl and index because it is present in the initial server response. In contrast, JavaScript content is often rendered on the client side, meaning it is generated dynamically after the initial HTML loads.

Search engines must execute the JavaScript code to fully render the page, which can introduce delays and complications in indexing.

This difference can lead to issues where search engines struggle to see the same content as users.

JavaScript Crawling Impact on SEO

The primary SEO concern with JavaScript is that if search engines cannot effectively render and index the content, it may not appear in search results. This can lead to reduced visibility and traffic, negating the benefits of dynamic, interactive web experiences.

Common JavaScript SEO Issues

Delayed Content Loading

One of the main issues with JavaScript content is delayed loading. Search engines have limited time and resources for rendering each page, so content that appears only after slow API calls, long timers, or user actions such as scrolling and clicking may be missing when the page is indexed.

Client-Side vs. Server-Side Rendering

Client-side rendering (CSR) relies on the browser to execute JavaScript and render the content, which can be problematic for search engines because the initial HTML is often little more than an empty container and a script tag. Server-side rendering (SSR) generates the HTML content on the server before sending it to the browser, which can improve crawlability and indexing.

Poor User Experience

Poor implementation of JavaScript can lead to a subpar user experience, with slow loading times and content that is difficult to interact with. This not only affects SEO but can also lead to higher bounce rates and lower engagement.

Ensuring Effective Crawling of JavaScript Content

Server-Side Rendering (SSR)

SSR is a technique where the server generates the complete HTML for a page, including content that JavaScript would otherwise add in the browser, before sending it to the client. This approach ensures that search engines receive fully rendered content on the initial fetch, improving crawlability and indexing.
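A minimal sketch of the idea in TypeScript, assuming a hypothetical in-memory `CATALOG` and a hand-written template (real projects more often use a framework's SSR support). The product name, price, and description are already in the HTML string the server returns, so a crawler sees them on the initial fetch without executing any JavaScript; the client bundle can still load afterwards for interactivity.

```typescript
interface Product {
  slug: string;
  name: string;
  priceCents: number;
  description: string;
}

// Hypothetical data source; in practice a database or API call.
const CATALOG: Product[] = [
  { slug: "trail-shoe", name: "Trail Shoe", priceCents: 8999, description: "Lightweight shoe for rough terrain." },
];

// Escapes text before interpolating it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Builds the full page on the server; the content is in the HTML, not added later by JS.
function renderProductPage(p: Product): string {
  const price = (p.priceCents / 100).toFixed(2);
  return [
    "<!doctype html>",
    `<html><head><title>${escapeHtml(p.name)}</title></head><body>`,
    `<h1>${escapeHtml(p.name)}</h1>`,
    `<p class="price">$${price}</p>`,
    `<p>${escapeHtml(p.description)}</p>`,
    `<script src="/app.js" defer></script>`, // optional client-side enhancements
    "</body></html>",
  ].join("\n");
}

// Hypothetical request handler for paths like /products/trail-shoe.
function handleRequest(path: string): { status: number; html: string } {
  const match = /^\/products\/([a-z0-9-]+)$/.exec(path);
  const slug = match ? match[1] : null;
  const product = CATALOG.filter((p) => p.slug === slug)[0];
  if (!product) {
    return { status: 404, html: "<!doctype html><title>Not found</title><h1>Not found</h1>" };
  }
  return { status: 200, html: renderProductPage(product) };
}
```

Unknown paths get a real 404 status rather than an empty shell served with a 200, which helps search engines avoid indexing error pages.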

Dynamic Rendering

Dynamic rendering involves serving a static HTML version of your content to search engines while providing the full JavaScript experience to users. It can help content-heavy websites that rely on JavaScript for interactive features, but Google now describes it as a workaround rather than a long-term solution and recommends server-side rendering, static rendering, or hydration instead.
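The routing decision behind dynamic rendering can be sketched as follows. The crawler tokens, the `SNAPSHOTS` store, and `routeRequest` are all hypothetical; production setups typically rely on a maintained bot list and may verify crawlers (for example by reverse DNS lookup), since user-agent strings are easy to spoof. The snapshot and the app should present the same content, only delivered differently.

```typescript
// Illustrative, incomplete list of crawler user-agent tokens (an assumption).
const CRAWLER_TOKENS = ["googlebot", "bingbot", "duckduckbot", "baiduspider", "yandex"];

function isLikelyCrawler(userAgent: string): boolean {
  const ua = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.indexOf(token) !== -1);
}

interface DynamicResponse {
  variant: "snapshot" | "app";
  body: string;
}

// Hypothetical store of pre-rendered HTML snapshots, keyed by path
// (for example produced earlier by a headless browser).
const SNAPSHOTS: { [path: string]: string } = {
  "/pricing": "<html><body><h1>Pricing</h1><p>Starter plan: $10/month</p></body></html>",
};

// The JavaScript app shell regular visitors receive; content is filled in client-side.
const APP_SHELL = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>';

// Hypothetical router: crawlers get a snapshot when one exists, everyone else gets the app.
function routeRequest(path: string, userAgent: string): DynamicResponse {
  const snapshot = SNAPSHOTS[path];
  if (isLikelyCrawler(userAgent) && snapshot !== undefined) {
    return { variant: "snapshot", body: snapshot };
  }
  return { variant: "app", body: APP_SHELL };
}
```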

Hybrid Rendering

Hybrid rendering combines SSR and CSR: the server sends a fully rendered page, and client-side JavaScript then takes over (a step often called hydration) to add interactivity. This approach can provide a balance between SEO benefits and user experience.
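One common ingredient of hybrid rendering is handing the data the server rendered with over to the client, so client-side code can hydrate the page without refetching it or rendering something different. A minimal sketch, assuming a hypothetical `renderWithState` helper and a JSON script element with the id "initial-state" (frameworks do this for you with their own conventions). Escaping "<" in the JSON keeps a value such as "</script>" from closing the element early.

```typescript
interface PageState {
  title: string;
  items: string[];
}

function escapeHtml(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Serializes state for embedding in HTML; "\u003c" is still valid JSON for "<".
function serializeState(state: PageState): string {
  return JSON.stringify(state).replace(/</g, "\\u003c");
}

// Server side (hypothetical helper): the visible content AND its source data go into the HTML.
function renderWithState(state: PageState): string {
  const list = state.items.map((item) => `<li>${escapeHtml(item)}</li>`).join("");
  return [
    `<h1>${escapeHtml(state.title)}</h1>`,
    `<ul id="list">${list}</ul>`,
    `<script id="initial-state" type="application/json">${serializeState(state)}</script>`,
    `<script src="/app.js" defer></script>`,
  ].join("\n");
}

// Client side (sketch): parse the embedded state instead of fetching it again.
// In a browser the string would come from document.getElementById("initial-state")?.textContent.
function readState(json: string): PageState {
  return JSON.parse(json) as PageState;
}
```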

Best Practices for JavaScript Content Management

Progressive Enhancement

Progressive enhancement involves building a basic version of your site using HTML and CSS, ensuring it is fully accessible without JavaScript. Additional JavaScript enhancements can then be layered on top, improving the user experience while maintaining crawlability.
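For example, a product list that loads more items with JavaScript can still expose every page of results as a plain link. A minimal sketch, assuming a hypothetical /products?page=N URL scheme and `renderPagination` helper: the server outputs ordinary <a href> links that crawlers and users without JavaScript can follow, and client-side code may later intercept those clicks to load results in place.

```typescript
// Hypothetical helper: renders crawlable pagination links for /products?page=N.
function renderPagination(current: number, totalPages: number): string {
  const links: string[] = [];
  if (current > 1) {
    links.push(`<a href="/products?page=${current - 1}">Previous</a>`);
  }
  for (let p = 1; p <= totalPages; p++) {
    links.push(
      p === current
        ? `<span aria-current="page">${p}</span>`
        : `<a href="/products?page=${p}">${p}</a>`,
    );
  }
  if (current < totalPages) {
    links.push(`<a href="/products?page=${current + 1}">Next</a>`);
  }
  // Enhancement hook: client-side JavaScript may attach click handlers to this nav
  // and fetch results in place, but the links already work without it.
  return `<nav class="pagination">${links.join(" ")}</nav>`;
}
```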

Prerendering

Prerendering is the process of generating static HTML versions of your pages at build time. These prerendered pages can be served to all visitors, including search engines, ensuring that all content is accessible and indexable.
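A minimal build-step sketch, assuming a hypothetical list of routes known at build time and a hypothetical `renderRoute` function. Each route is mapped to an output file such as blog/hello-world/index.html that a static file server can return as-is; actually writing the files (for example with Node's fs module) is left out to keep the sketch self-contained.

```typescript
interface Route {
  path: string;
  title: string;
  body: string;
}

function escapeHtml(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Hypothetical renderer; a real build would run the app's own templates or components.
function renderRoute(route: Route): string {
  return (
    `<!doctype html><html><head><title>${escapeHtml(route.title)}</title></head>` +
    `<body><h1>${escapeHtml(route.title)}</h1><p>${escapeHtml(route.body)}</p></body></html>`
  );
}

// Maps a URL path to the file a static host would serve for it ("/" -> "index.html").
function outputFileFor(path: string): string {
  const trimmed = path.replace(/^\/+|\/+$/g, "");
  return trimmed === "" ? "index.html" : `${trimmed}/index.html`;
}

// Build step: render every known route once, ahead of any request.
function prerender(routes: Route[]): { [file: string]: string } {
  const files: { [file: string]: string } = {};
  for (const route of routes) {
    files[outputFileFor(route.path)] = renderRoute(route);
  }
  return files;
}
```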

Using rel="canonical" Tags Correctly

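Canonical tags help prevent duplicate content issues by indicating the preferred version of a page to search engines. When managing JavaScript content, it’s essential to use canonical tags correctly so that the right version of your content is indexed. If JavaScript adds or changes the canonical tag, make sure the rendered page ends up with exactly one rel="canonical" link, pointing to the URL you want indexed.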
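When the same content is reachable under several URLs (tracking parameters, fragments, reordered query strings), one way to keep the canonical tag consistent is to derive it from a normalized URL. A minimal sketch using the standard URL class, assuming the hypothetical `IGNORED_PARAMS` list really never changes page content on your site (check this before reusing it). It produces a single rel="canonical" link.

```typescript
// Hypothetical list: query parameters assumed NOT to change page content.
const IGNORED_PARAMS = ["utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content", "gclid", "fbclid"];

function canonicalUrl(raw: string): string {
  const url = new URL(raw); // the parser also lowercases the host name
  url.hash = ""; // fragments are not separate pages
  for (const param of IGNORED_PARAMS) {
    url.searchParams.delete(param);
  }
  url.searchParams.sort(); // ?size=9&color=red and ?color=red&size=9 become the same URL
  return url.toString();
}

function canonicalLinkTag(raw: string): string {
  // Escape for use inside a double-quoted HTML attribute.
  const href = canonicalUrl(raw).replace(/&/g, "&amp;").replace(/"/g, "&quot;");
  return `<link rel="canonical" href="${href}">`;
}
```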

Successful JavaScript SEO Implementations

E-commerce Platforms

E-commerce sites often rely heavily on JavaScript for features such as dynamic product listings and interactive shopping carts. Implementing server-side rendering or dynamic rendering can help ensure that all product content is indexed, improving visibility in search results.

Content-Rich Websites

Content-rich websites, such as news portals and blogs, can benefit from JavaScript SEO by ensuring that all articles and multimedia content are fully rendered and indexed. This can be achieved through SSR or prerendering.

Interactive Applications

Interactive applications, including social media platforms and web-based tools, often face challenges with JavaScript SEO. Implementing hybrid rendering techniques can help balance the need for interactivity with the requirements of effective crawling and indexing.

Tools and Techniques for JavaScript SEO

Google Search Console

Google Search Console provides valuable insights into how Google crawls and indexes your JavaScript content. Using the URL Inspection tool, you can see how Google renders your pages and identify any issues that need to be addressed.

Lighthouse

Lighthouse is an open-source tool that audits web pages for performance, accessibility, and SEO. It provides actionable insights into how your JavaScript content impacts these areas and offers recommendations for improvement.

Fetch as Google

The “Fetch as Google” tool in the old version of Google Search Console let you see how Googlebot fetched and rendered a URL on your site. It has since been retired, and its functionality now lives in the URL Inspection tool, which you can use to identify rendering issues and confirm that your JavaScript content is being indexed.

Puppeteer and Headless Chrome

Puppeteer is a Node library that provides a high-level API to control Chrome or Chromium over the DevTools Protocol. It lets you render your pages programmatically in a headless browser and check which content appears only after JavaScript runs, which is exactly the content search engines must render to see.
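To find content that depends on JavaScript, compare the raw HTML the server returns with the HTML after rendering. With Puppeteer, the rendered HTML can be read with page.content() after page.goto(url, { waitUntil: "networkidle0" }); the sketch below covers only the comparison step and takes both HTML strings as input. The `renderOnlyText` helper is hypothetical, and its regex-based text extraction is a crude assumption suited to a quick check, not a faithful HTML parser.

```typescript
// Extracts trimmed text chunks between tags, ignoring <script> and <style> contents.
function textChunks(html: string): string[] {
  return html
    .replace(/<(script|style)\b[\s\S]*?<\/\1>/gi, "")
    .split(/<[^>]*>/)
    .map((chunk) => chunk.replace(/\s+/g, " ").trim())
    .filter((chunk) => chunk.length > 0);
}

// Hypothetical helper: text present in the rendered HTML but absent from the raw HTML,
// i.e. content a crawler only sees if it executes the page's JavaScript.
function renderOnlyText(rawHtml: string, renderedHtml: string): string[] {
  const rawChunks = textChunks(rawHtml);
  return textChunks(renderedHtml).filter((chunk) => rawChunks.indexOf(chunk) === -1);
}
```

An empty result suggests the important text is already in the initial HTML; anything listed is text a search engine will only see after rendering.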

FAQs

What is JavaScript content? JavaScript content refers to the elements of a webpage that are created or modified using JavaScript. This can include dynamic content updates, interactive features, and single-page applications.

Why is JavaScript content challenging for SEO? JavaScript content can delay the loading of critical information, making it harder for search engines to crawl and index. If not managed properly, this can lead to incomplete indexing and lower search rankings.

How can I ensure that my JavaScript content is crawled effectively? Using server-side rendering, dynamic rendering, or prerendering can help ensure that search engines can access and index your JavaScript content. Regular testing and validation are also crucial.

What tools can help with managing JavaScript content for SEO? Tools like Google Search Console, Lighthouse, Chrome DevTools, and third-party services such as Screaming Frog SEO Spider can help test, analyze, and optimize your JavaScript content for better crawling and indexing.

What is server-side rendering (SSR)? Server-side rendering is a technique where JavaScript is executed on the server before the content is sent to the user’s browser. This ensures that the fully rendered content is available for search engine crawlers, improving SEO.

How does structured data work with JavaScript? Structured data can be implemented using JavaScript to provide search engines with detailed information about the content of a page. Ensuring that this structured data is rendered correctly and accessible to crawlers is essential for effective SEO.
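As an illustration, the sketch below builds schema.org Article markup as JSON-LD and wraps it in a <script type="application/ld+json"> element; the `articleJsonLd` helper and its field values are hypothetical. Generating it on the server or at build time puts the markup in the initial HTML; if it is injected on the client instead, crawlers only see it after rendering, and Google's Rich Results Test can confirm whether it is detected.

```typescript
interface ArticleInfo {
  headline: string;
  authorName: string;
  datePublished: string; // ISO 8601 date, e.g. "2024-05-01"
  url: string;
}

// Hypothetical helper that returns a ready-to-embed JSON-LD script element.
function articleJsonLd(info: ArticleInfo): string {
  const data = {
    "@context": "https://schema.org",
    "@type": "Article",
    headline: info.headline,
    author: { "@type": "Person", name: info.authorName },
    datePublished: info.datePublished,
    mainEntityOfPage: info.url,
  };
  // Escape "<" so a value such as "</script>" cannot end the element early.
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```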