Optimal Crawl Depth for Indexing

Optimal crawl depth in SEO means keeping important pages within a few clicks of the homepage, typically three, so that search engines like Google can reach and index them efficiently. This supports your site’s visibility and search engine rankings.

Balancing accessibility with a well-structured site architecture is key to achieving optimal crawl depth and maximizing SEO performance.

Definition of Crawl Depth

Crawl depth is a measure of the distance between a specific page and the homepage of a website, quantified by the number of clicks needed to navigate from one to the other. This concept is pivotal in SEO as it influences how search engines like Google crawl and index a site.

If search engines find it challenging to reach your content due to excessive crawl depth, your site’s visibility in search results can suffer.

Understanding how search engines index websites is crucial for SEO success, and crawl depth is one key factor in that process.

Properly managing crawl depth ensures that your site’s content is effectively indexed, making it more accessible to users and improving your search engine rankings.
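Because crawl depth is the minimum number of clicks from the homepage, it can be computed with a breadth-first search over a site's internal links. The following is a minimal sketch, assuming the link graph is already available as a dictionary; the `site` data and the function name `click_depths` are hypothetical and used only for illustration.

```python
from collections import deque

def click_depths(links, home):
    """Return {page: minimum clicks from home} using breadth-first search."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site: each key links to the pages in its list.
site = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/shop": ["/shop/shoes"],
    "/shop/shoes": ["/shop/shoes/red-sneaker"],
}
depths = click_depths(site, "/")
print(depths)
```

Pages that never appear in the result are unreachable by links from the homepage, which is worth checking separately.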

Importance of Crawl Depth in SEO

Crawl depth affects how quickly search engines discover and index new content. Pages that are buried deep within a website may be crawled less frequently, resulting in delayed indexing or, in some cases, no indexing at all.

This means your carefully crafted content may not reach its intended audience if not properly indexed.

Understanding Search Engine Crawling

How Search Engines Work

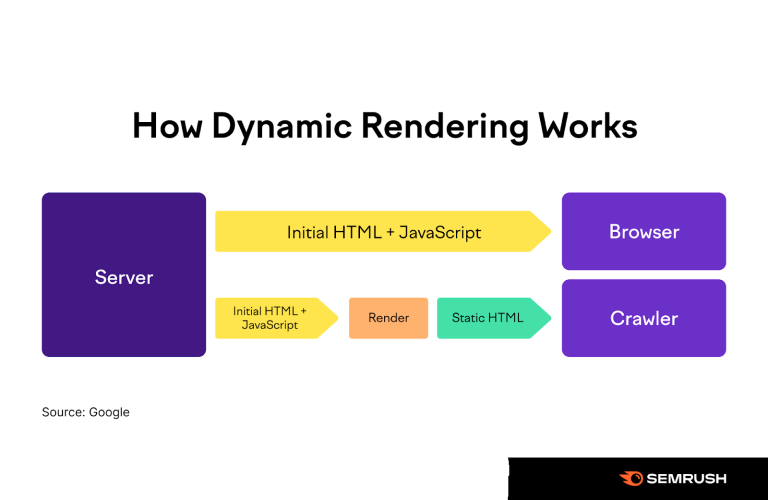

Search engines operate through a process known as crawling, where automated bots, also known as spiders or crawlers, visit web pages and follow links to discover new content. These crawlers then index the content, adding it to a vast database that powers search results.

Search Engine Crawlers: An Overview

Crawlers like Googlebot systematically browse the internet, starting with a list of known URLs and following hyperlinks to find new pages.

The efficiency of this process is influenced by the structure of your website and the crawl depth of your pages. Well-structured sites with optimal crawl depth allow crawlers to navigate and index content more effectively.
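The "follow hyperlinks" step can be illustrated with Python's standard library. This is a simplified sketch, not how Googlebot is implemented: it extracts same-host links from one HTML string, and the sample markup and the `example.com` URLs are placeholders.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)

def internal_links(html, page_url):
    """Return absolute same-host links found in html, in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    host = urlparse(page_url).netloc
    found = []
    for href in parser.hrefs:
        absolute = urljoin(page_url, href).split("#")[0]  # drop fragments
        if urlparse(absolute).netloc == host and absolute not in found:
            found.append(absolute)
    return found

# Placeholder markup: one root-relative link, one external link, one relative link.
sample = (
    '<a href="/about">About</a> '
    '<a href="https://other.example/x">External</a> '
    '<a href="post#top">Post</a>'
)
print(internal_links(sample, "https://www.example.com/blog/"))
```

Feeding each discovered link back into a queue of pages to fetch is what turns this single step into a crawl.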

Indexing Process Explained

Once a crawler discovers a page, the content is analyzed and indexed based on relevance and quality. This indexed content is then used to generate search results for user queries.

Ensuring that your pages are easily accessible and not buried too deep within your site can enhance the efficiency of this process, leading to better visibility in search engine results pages (SERPs).

Factors Affecting Crawl Depth

Site Architecture

A well-organized site architecture is fundamental for optimal crawl depth. A flat architecture, where most pages are accessible within a few clicks from the homepage, is generally preferred over a deep, hierarchical structure. This ensures that all important pages are within reach of both users and search engine crawlers.
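A rough calculation shows why flatter structures keep pages closer to the homepage. The sketch below assumes an idealized site in which every page links to the same number of new pages, which no real site matches exactly; the function names are hypothetical.

```python
def max_pages_within(depth, links_per_page):
    """Upper bound on pages reachable within `depth` clicks, homepage included."""
    return sum(links_per_page ** level for level in range(depth + 1))

def min_depth_for(total_pages, links_per_page):
    """Smallest depth at which `total_pages` pages could all be reachable."""
    if links_per_page < 1:
        raise ValueError("links_per_page must be at least 1")
    depth = 0
    while max_pages_within(depth, links_per_page) < total_pages:
        depth += 1
    return depth

# With 10 links per page, 10,000 pages need at least 4 clicks;
# with 100 links per page, the same site fits within 2 clicks.
print(min_depth_for(10_000, 10))
print(min_depth_for(10_000, 100))
```

The trade-off is that more links per page means busier navigation, so the numbers are a guide to what is possible rather than a target.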

URL Structure

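The structure of your URLs also plays a role in how easily a site is crawled. Clean, descriptive URLs that avoid unnecessary parameters and subdirectories can make it easier for crawlers to navigate your site. Note that the number of subdirectories in a URL is not the same as crawl depth: a page with a long path can still be one click from the homepage if it is linked there. Even so, short and simple URLs are easier to manage and less prone to duplication than complex, lengthy ones.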

Internal Linking

Effective internal linking helps distribute link equity throughout your site, making it easier for crawlers to discover and index your content. Links should be strategically placed to ensure that all important pages are reachable within a few clicks, thereby optimizing crawl depth.

Optimal Crawl Depth Explained

What is Optimal Crawl Depth?

Optimal crawl depth refers to the ideal number of clicks from the homepage required to reach any given page on your site. Generally, this is considered to be within three clicks. Keeping pages within this range ensures that crawlers can easily find and index all relevant content.

Ideal Number of Clicks from Home Page

While three clicks is a common benchmark, the optimal number can vary depending on the size and complexity of your site. For larger sites, ensuring that important pages are no more than three to four clicks away can strike a balance between accessibility and organizational depth.
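Once click depths are known for each URL (for example, from a crawler export), a short report can show how pages are distributed and which ones exceed a chosen threshold. This is a sketch with made-up data; the threshold of three is the benchmark discussed above, not a fixed rule.

```python
from collections import Counter

def depth_report(depths, max_depth=3):
    """Summarise a {url: click_depth} mapping against a depth threshold."""
    histogram = Counter(depths.values())
    too_deep = sorted(url for url, d in depths.items() if d > max_depth)
    return dict(sorted(histogram.items())), too_deep

# Hypothetical depths for a small site.
page_depths = {
    "/": 0,
    "/shop": 1,
    "/blog": 1,
    "/shop/shoes": 2,
    "/shop/shoes/red": 3,
    "/shop/shoes/red/reviews": 4,
}
histogram, too_deep = depth_report(page_depths, max_depth=3)
print(histogram)
print(too_deep)
```

For a larger site, passing `max_depth=4` applies the looser benchmark instead.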

Impact of Deep vs. Shallow Crawl Depth

Pros and Cons of Deep Crawl Depth

Deep crawl depth, where pages are several clicks away from the homepage, can organize content in a detailed, hierarchical manner. However, this can also mean that crawlers may miss or delay indexing deeper pages, potentially affecting visibility and ranking.

Benefits and Drawbacks of Shallow Crawl Depth

A shallow crawl depth, with most pages accessible within a few clicks, ensures that content is quickly and easily indexed. However, it can lead to a cluttered site structure, which might confuse users if not managed properly. Balancing crawl depth with user experience is key.

Best Practices for Managing Crawl Depth

Simplifying Site Navigation

Simplifying your site navigation is essential for managing crawl depth. Clear, concise menus and logical categorization help both users and search engines navigate your site efficiently. Avoiding overly complex dropdowns and multi-tiered structures can streamline the navigation process.

Improving Internal Linking

Regularly reviewing and enhancing internal linking is crucial for optimal crawl depth. Ensure that every important page is linked from multiple relevant pages, spreading link equity and making it easier for crawlers to discover and index content.
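One way to review internal linking is to count how many distinct pages link to each page and flag those with few or no inbound links. The sketch below assumes the same kind of link dictionary a crawler could produce; the site data and the minimum of two inbound links are illustrative choices, not established thresholds.

```python
from collections import Counter

def inbound_link_counts(links):
    """Count how many distinct pages link to each page."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):
            if target != source:  # ignore self-links
                counts[target] += 1
    return counts

def weakly_linked(links, home, min_inbound=2):
    """Return pages (other than home) with fewer than min_inbound linking pages."""
    counts = inbound_link_counts(links)
    return sorted(p for p, n in counts.items() if p != home and n < min_inbound)

# Hypothetical site; "/old-landing" has no inbound links at all.
site_links = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1", "/shop"],
    "/shop": ["/shop/shoes"],
    "/blog/post-1": ["/shop/shoes"],
    "/shop/shoes": [],
    "/old-landing": [],
}
print(weakly_linked(site_links, "/"))
```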

URL Parameters Optimization

Managing URL parameters is another important aspect of optimizing crawl depth. Parameters can confuse search engines and lead to duplicate content issues.

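Implementing canonical tags and linking internally to one consistent version of each URL can help mitigate these problems. Google retired the URL Parameters tool in Search Console in 2022, so parameter handling now has to be managed on the site itself, for example with canonical tags and robots.txt rules.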
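To decide which URL a canonical tag should point to, parameterized variants can be normalized to a single form. The following is a minimal sketch: the list of ignored parameters is an assumption and has to be adapted to the parameters your own site uses.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed list of parameters that do not change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "ref"}

def canonical_url(url):
    """Drop ignored parameters and fragments, and sort what remains."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # stable order so equivalent URLs compare equal
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )

variant = "https://Example.com/shoes?utm_source=news&size=9&color=red#reviews"
print(canonical_url(variant))
```

The result is the value that would go into the `href` of the page's `<link rel="canonical">` element.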

Crawl Budget Considerations

Understanding Crawl Budget

Crawl budget refers to the number of pages a search engine will crawl on your site within a given timeframe. This budget is influenced by the size of your site, the quality of your content, and the efficiency of your server. Optimizing crawl depth can help make the most of your crawl budget.

How to Optimize Crawl Budget?

To optimize your crawl budget, prioritize high-quality content and ensure that your site loads quickly. Regularly updating your sitemap and using tools like Google Search Console to monitor crawl stats can help you manage your crawl budget effectively.
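Server access logs offer another view of crawl activity. The sketch below counts requests per path whose user agent mentions Googlebot. It assumes the widely used "combined" log format, and the sample lines are invented; user-agent strings can be spoofed, so treat the counts as approximate.

```python
import re
from collections import Counter

# Assumes the "combined" access log format; adjust the pattern for other formats.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

def googlebot_hits(log_lines):
    """Count requests per path where the user agent contains 'Googlebot'."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits

BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER = "Mozilla/5.0 (X11; Linux x86_64) Firefox/125.0"

# Invented sample lines using documentation-only IP addresses.
sample_log = [
    f'192.0.2.1 - - [10/Oct/2024:13:55:36 +0000] "GET /shop/shoes HTTP/1.1" 200 2326 "-" "{BOT}"',
    f'192.0.2.1 - - [10/Oct/2024:14:02:11 +0000] "GET /shop/shoes HTTP/1.1" 200 2326 "-" "{BOT}"',
    f'192.0.2.1 - - [10/Oct/2024:14:05:40 +0000] "GET /blog HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'198.51.100.7 - - [10/Oct/2024:14:06:02 +0000] "GET /blog HTTP/1.1" 200 5120 "-" "{BROWSER}"',
]
hits = googlebot_hits(sample_log)
print(hits.most_common())
```

Comparing these counts with each page's click depth shows whether deeper pages are in fact being crawled less often on your site.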

Tools for Analyzing Crawl Depth

Google Search Console

Google Search Console is a vital tool for understanding how Google crawls your site. Its Crawl Stats and Page indexing reports show crawl activity and identify indexing issues. It does not report click depth directly, so it works best alongside a site crawler that does.

Screaming Frog SEO Spider

Screaming Frog SEO Spider is another powerful tool for analyzing crawl depth. It crawls your website, providing detailed reports on site architecture, URL structure, and internal linking, helping you identify areas for improvement.

Ahrefs Site Audit

Ahrefs Site Audit offers comprehensive crawl analysis, highlighting issues related to crawl depth, broken links, and more. This tool can help you understand how search engines view your site and guide you in making necessary adjustments.

Case Studies

Successful Examples of Optimal Crawl Depth

Examining case studies of websites that have successfully optimized their crawl depth can provide valuable insights. For instance, websites that restructured their navigation and improved internal linking often see significant improvements in indexing speed and search rankings.

Common Pitfalls and How to Avoid Them

Learning from common pitfalls in crawl depth management is equally important. Issues such as overly complex URL structures, poor internal linking, and excessive use of URL parameters can hinder crawl efficiency. Avoiding these mistakes can lead to better crawl and indexing outcomes.

Crawl Depth for Different Website Types

E-commerce Sites

E-commerce sites often have complex structures with numerous product pages. Ensuring that category and product pages are within three clicks of the homepage can enhance crawl efficiency and improve indexing for e-commerce sites.

Blogs and Content Sites

For blogs and content-rich sites, organizing content into clear categories and tagging posts effectively can help manage crawl depth. Ensuring that popular and recent posts are easily accessible can also aid in faster indexing.

Large Corporate Websites

Large corporate websites typically have extensive content and multiple sections. Implementing a well-thought-out site architecture and effective internal linking strategy is crucial for managing crawl depth and ensuring comprehensive indexing.

Advanced Tips for Optimizing Crawl Depth

Leveraging XML Sitemaps

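XML sitemaps are a valuable tool for guiding search engines through your site. A sitemap does not change how many clicks a page is from the homepage, but it helps search engines discover pages that sit deep in the structure. Ensuring that your sitemap is up-to-date and includes all important pages can improve indexing efficiency.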
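A sitemap can be generated from a list of URLs with Python's standard library. This is a minimal sketch using the sitemaps.org 0.9 namespace; the URLs and dates are placeholders, and real sitemaps are usually produced by the CMS or a plugin.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Build sitemap XML from (absolute_url, lastmod or None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:  # lastmod is optional; expected as YYYY-MM-DD
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder entries.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/shop/shoes", None),
])
print(sitemap_xml)
```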

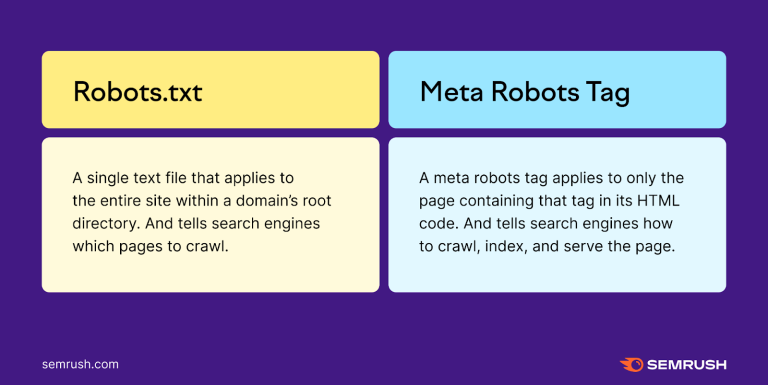

Using Robots.txt Effectively

Properly configuring your robots.txt file can control which parts of your site search engines crawl. Blocking unnecessary pages and directories can help focus crawlers on your most important content, optimizing your crawl budget.
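Rules can be checked before deployment with Python's built-in `urllib.robotparser`. The robots.txt content below is a hypothetical example. Note that Python's parser applies the first matching rule, whereas Google applies the most specific one, so the sample lists the `Disallow` lines first to give the same result under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /
"""

def allowed(robots_txt, url, agent="Googlebot"):
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

for path in ("/shop/shoes", "/cart/checkout", "/search?q=shoes"):
    print(path, allowed(ROBOTS_TXT, "https://www.example.com" + path))
```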

Regularly Auditing Site Architecture

Conducting regular audits of your site architecture can help identify and address issues related to crawl depth. Tools like Screaming Frog and Ahrefs can assist in these audits, ensuring that your site remains well-structured and easily navigable.

Future Trends in Crawl Depth Optimization

AI and Machine Learning in Crawling

The future of crawl depth optimization lies in the advancements of AI and machine learning. These technologies can predict and prioritize pages for crawling, enhancing the efficiency and effectiveness of the indexing process.

Predictive Indexing Techniques

Predictive indexing, where search engines anticipate and index new content based on patterns and historical data, is another emerging trend. Staying updated with these technologies can give you an edge in managing crawl depth and improving SEO performance.

FAQs

What is crawl depth in SEO?

Crawl depth in SEO refers to the number of clicks required to reach a specific page from the homepage of a website. It affects how easily search engines can discover and index your content.

How does crawl depth affect indexing?

Crawl depth impacts indexing by determining how accessible your content is to search engine crawlers. Pages with excessive crawl depth may be crawled less frequently, leading to delayed or missed indexing.

What is an ideal crawl depth for my website?

An ideal crawl depth is generally considered to be within three clicks from the homepage. This ensures that all important pages are easily accessible to both users and search engine crawlers.

How can I reduce my website’s crawl depth?

You can reduce your website’s crawl depth by simplifying site navigation, improving internal linking, and optimizing your URL structure. Regularly auditing your site architecture can also help maintain optimal crawl depth.

What tools can help me analyze crawl depth?

Tools like Google Search Console, Screaming Frog SEO Spider, and Ahrefs Site Audit are excellent for analyzing crawl depth. They provide insights into site structure, internal linking, and indexing issues.

Can crawl depth impact my site’s SEO performance?

Yes, crawl depth can significantly impact your site’s SEO performance. Optimal crawl depth ensures that your content is efficiently indexed, improving visibility and ranking in search engine results.

Summary of Key Points

Crawl depth is a critical factor in SEO, influencing how search engines crawl and index your website. Optimal crawl depth ensures that your content is easily discoverable, improving your site’s visibility and search engine rankings.

Effective crawl depth management requires a combination of good site architecture, clean URL structures, and strategic internal linking. By following best practices and utilizing the right tools, you can ensure that your website is optimally indexed and performs well in search results.