How to Properly Use Robots.txt Files to Control Crawling

Properly configuring your robots.txt file is essential for controlling how search engines crawl your website.

Robots.txt files control website crawling by specifying which pages or directories web crawlers may or may not request, which in turn influences a site’s visibility and performance in search engines.

The robots.txt file is a critical tool for webmasters aiming to optimize their site’s accessibility and performance.

Understanding the nuances of this file is the first step towards leveraging its full potential.

Definition and Purpose of Robots.txt Files

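A robots.txt file is a plain text file served from the root of a website (for example, https://example.com/robots.txt). It serves as a guide for web crawlers, telling them which pages or sections of the site they may crawl. It governs crawling rather than indexing: a URL that is blocked from crawling can still be indexed if other pages link to it.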

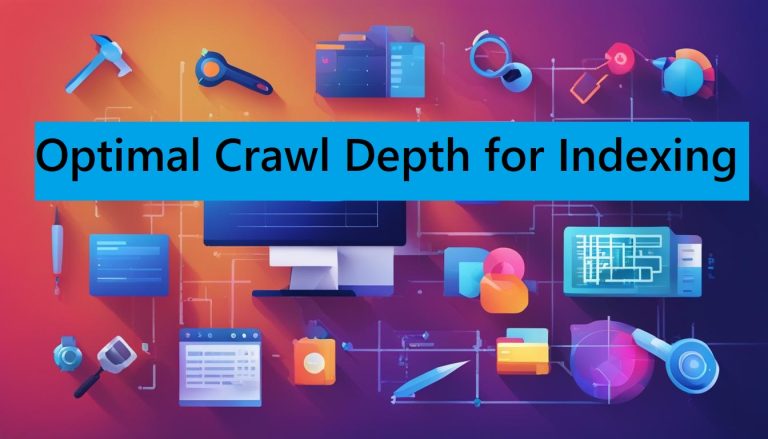

By controlling the behavior of these crawlers, webmasters can ensure that only the most relevant and important parts of their site are available to search engines, thus enhancing SEO efforts and preserving server resources.

History and Evolution of Robots.txt

The concept of robots.txt was introduced in 1994 by Martijn Koster, a pioneer in the development of web standards. The initial purpose was to provide a standard for web crawlers to avoid certain parts of a website, thus preventing server overload and protecting sensitive content.

Over the years, major search engines extended the original convention with additions such as the Allow directive, Sitemap references, and path wildcards. In 2022 the core protocol was published as RFC 9309, the Robots Exclusion Protocol.

Creating a Robots.txt File

Creating an effective robots.txt file requires a solid understanding of its basic syntax and structure. This file uses a straightforward format, yet its implications for web crawling are profound.

Basic Syntax and Structure

A robots.txt file consists of one or more groups of directives, each group specifying which parts of the site can or cannot be accessed by different crawlers. Here is a simple example:

User-agent: *

Disallow: /private/

Allow: /public/

In this example, all web crawlers (indicated by the asterisk) are disallowed from accessing the “private” directory but are allowed to access the “public” directory.
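The rules from the example above can be checked programmatically. The following is a minimal sketch using Python's standard-library `urllib.robotparser`; the host `example.com` and the page paths are placeholders chosen for illustration, and the helper name `build_parser` is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The rules from the example above, as they would appear in the file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory (no network request is made)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# example.com is a placeholder host used only for illustration.
print(parser.can_fetch("*", "https://example.com/private/report.html"))  # False
print(parser.can_fetch("*", "https://example.com/public/index.html"))    # True
print(parser.can_fetch("*", "https://example.com/blog/post.html"))       # True
```

The last call returns True because no rule mentions /blog/: paths that no rule matches are crawlable by default, so the Allow line here mostly documents intent.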

Common Directives

Several directives can be used to control crawler behavior effectively:

- User-agent: Specifies which crawler the directives apply to.

- Disallow: Blocks crawlers from accessing specified paths.

- Allow: Permits crawlers to access specific paths within disallowed sections.

- Sitemap: Provides the URL of the site’s XML sitemap, helping crawlers to discover content efficiently.
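As a rough illustration of how a crawler might read the Sitemap directive alongside the access rules, here is a short sketch with Python's `urllib.robotparser` (its `site_maps()` method requires Python 3.8 or later). The sitemap URL and the /admin/ path are assumptions made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file content: one rule group plus a sitemap reference.
robots_txt = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns the listed sitemap URLs, or None if there are none.
sitemaps = parser.site_maps()
print(sitemaps)
```

The Sitemap line stands outside any User-agent group, so it applies regardless of which crawler reads the file.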

Implementing Robots.txt for SEO

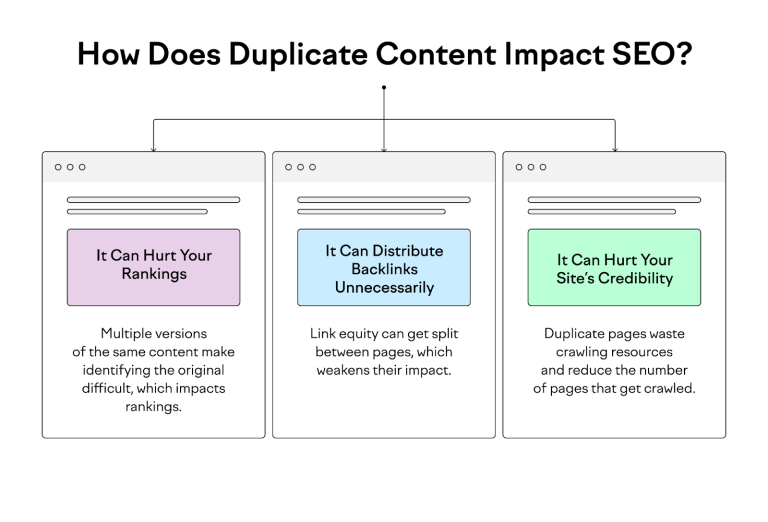

Proper implementation of robots.txt can support a site’s SEO by focusing crawler attention on the most valuable content and keeping crawlers away from irrelevant or duplicate pages.

Allowing and Disallowing Crawlers

Strategically allowing and disallowing crawlers can help prioritize the indexing of high-value content. For instance, you might disallow access to admin pages, internal search results, and other non-public areas while ensuring that essential landing pages and blog posts are fully accessible.

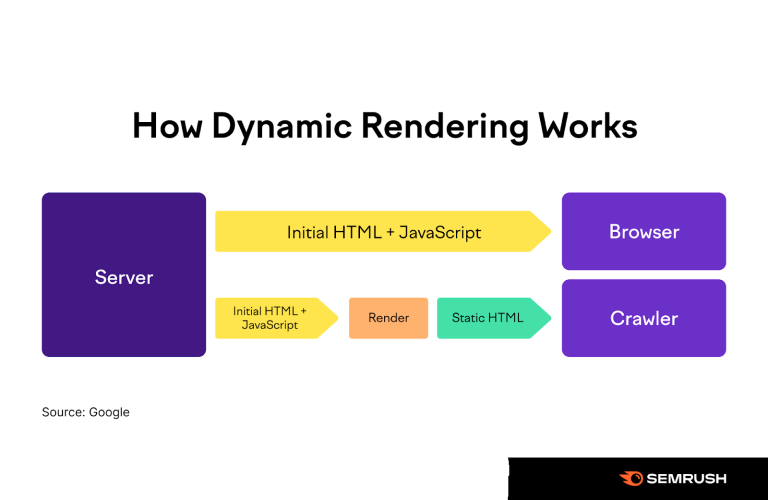

Handling Specific Bots

Different bots serve different purposes, and not all of them need access to your entire site. For example, you might want to allow Google’s web crawler full access while restricting lesser-known or potentially harmful bots. This can be achieved by specifying the User-agent in your directives:

User-agent: Googlebot

Disallow:

User-agent: BadBot

Disallow: /
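To see how these per-bot groups are selected, the sketch below feeds the same rules to Python's `urllib.robotparser` and asks about three user agents. `SomeOtherBot` and the product URL are hypothetical names added for illustration.

```python
from urllib.robotparser import RobotFileParser

robots_txt = """\
User-agent: Googlebot
Disallow:

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Placeholder URL; any path on the site gives the same answers here.
url = "https://example.com/products/widget.html"

results = {
    agent: parser.can_fetch(agent, url)
    for agent in ("Googlebot", "BadBot", "SomeOtherBot")
}
print(results)  # {'Googlebot': True, 'BadBot': False, 'SomeOtherBot': True}
```

A bot that matches no group, and for which no `User-agent: *` group exists, is unrestricted. An empty Disallow value likewise means nothing is blocked for that group.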

Advanced Robots.txt Techniques

For more complex sites, pattern-matching characters can be used to fine-tune crawler access.

Utilizing Wildcards and Pattern Matching

Robots.txt does not support full regular expressions. Major crawlers such as Googlebot and Bingbot recognize two special characters in paths: the asterisk (*), which matches any sequence of characters, and the dollar sign ($), which anchors a pattern to the end of the URL. For example:

User-agent: *

Disallow: /tmp/*

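This directive disallows all crawlers from accessing any directory or file within the /tmp/ directory. Because rules already match by path prefix, the trailing asterisk is optional here: Disallow: /tmp/ has the same effect. Wildcards are more useful mid-path or combined with $, as in Disallow: /*.pdf$, which blocks URLs ending in .pdf.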
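The matching behavior of such patterns can be sketched in a few lines of Python. This is an illustrative translation of robots.txt patterns into regular expressions, assuming the semantics used by major crawlers (`*` matches any run of characters, a trailing `$` anchors the end of the URL, everything else is a literal prefix). The function names are hypothetical and this is not any crawler's actual implementation.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal pieces and join them with '.*' where '*' appeared.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """True if the pattern matches the path, starting at its first character."""
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/tmp/*", "/tmp/cache/a.txt"))               # True
print(matches("/tmp/", "/tmp/cache/a.txt"))                # True: same effect
print(matches("/*.pdf$", "/docs/guide.pdf"))               # True
print(matches("/*.pdf$", "/docs/guide.pdf?download=1"))    # False
```

Note that Python's own `urllib.robotparser` treats `*` and `$` in paths literally, so it is not a suitable tool for testing wildcard rules.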

Combining Directives Effectively

Combining multiple directives can create a robust and precise crawling strategy. For instance, you might allow certain bots access to specific directories while blocking them from others, ensuring optimal indexing and server performance.
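When Allow and Disallow rules overlap, the outcome depends on how the crawler resolves the conflict. RFC 9309 specifies that the most specific (longest) matching path wins and that Allow wins a tie. The sketch below illustrates that rule with plain prefix matching; the /shop/ paths and the function name `is_allowed` are hypothetical, and wildcards are left out for brevity (Python 3.9+ for the type hints).

```python
def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Resolve Allow/Disallow conflicts by longest matching path.

    `rules` holds ("allow" | "disallow", path_prefix) pairs for one
    user-agent group.
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for kind, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty rule value matches nothing
        is_allow = kind == "allow"
        # A longer prefix wins; on a tie, Allow wins.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


rules = [
    ("disallow", "/shop/"),
    ("allow", "/shop/catalog/"),
    ("disallow", "/shop/catalog/drafts/"),
]

print(is_allowed(rules, "/shop/cart"))                # False
print(is_allowed(rules, "/shop/catalog/shoes"))       # True
print(is_allowed(rules, "/shop/catalog/drafts/new"))  # False
```

Not every parser follows this rule. Python's `urllib.robotparser`, for example, applies the first matching rule in file order, so listing the more specific rules first keeps behavior consistent across parsers.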

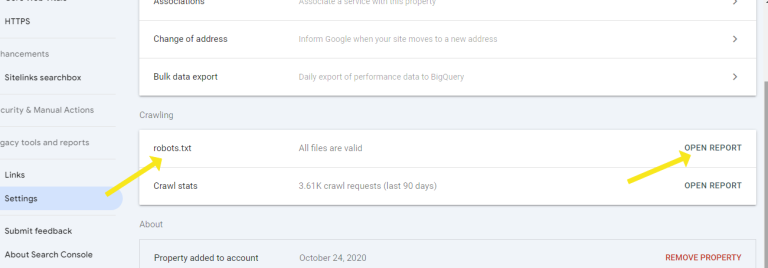

Testing and Validating Robots.txt

Once you’ve created or updated your robots.txt file, it’s crucial to test and validate it to ensure it behaves as expected.

Tools for Verification

Several tools are available for verifying your robots.txt file, including the robots.txt report in Google Search Console, which replaced Google’s earlier robots.txt Tester, and third-party validators. Depending on the tool, you can confirm that the file was fetched and parsed without errors or simulate how a given bot will treat a URL, helping you identify and correct any issues before they impact your site.
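Alongside such tools, a small regression check can catch accidental changes before a file is deployed. The sketch below is one possible approach, assuming Python's `urllib.robotparser`; the rules, URLs, and the helper name `failed_expectations` are made up for illustration. Because this parser ignores wildcards and applies the first matching rule, treat it as a complement to a search engine's own tooling rather than a replacement.

```python
from urllib.robotparser import RobotFileParser


def failed_expectations(robots_txt, expectations):
    """Return the (agent, url, expected) tuples the rules do not satisfy."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        (agent, url, expected)
        for agent, url, expected in expectations
        if parser.can_fetch(agent, url) != expected
    ]


# Hypothetical rules and the outcomes the site owner expects from them.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""

expectations = [
    ("Googlebot", "https://example.com/", True),
    ("Googlebot", "https://example.com/admin/login", False),
    ("Googlebot", "https://example.com/search?q=shoes", False),
    ("Googlebot", "https://example.com/blog/robots-guide", True),
]

failures = failed_expectations(robots_txt, expectations)
print(failures or "all expectations hold")
```

Keeping the expectation list next to the robots.txt file in version control makes it easy to rerun the check whenever the rules change.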

Analyzing Crawling Behavior

Regular analysis of crawling behavior can provide insights into how effectively your robots.txt file is managing crawler access. This can be done through server logs and various SEO tools that track crawler activity, helping you refine your directives over time.
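As one way to start with server logs, the sketch below counts crawler requests per top-level site section. It assumes access logs in the common "combined" format and identifies bots by a substring of the user-agent header; the sample lines are invented for the example, and the function name `crawler_hits` is hypothetical. User-agent strings can be spoofed, so these counts are only a first approximation.

```python
import re
from collections import Counter

# Combined log format: ... "METHOD path HTTP/x" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def crawler_hits(log_lines, bot_tokens=("Googlebot", "bingbot")):
    """Count requests per (bot token, top-level path segment)."""
    hits = Counter()
    for line in log_lines:
        m = LOG_LINE.search(line)
        if not m:
            continue
        for token in bot_tokens:
            if token.lower() in m["agent"].lower():
                # Keep only the first segment, e.g. "/admin" from "/admin/login".
                first = m["path"].lstrip("/").split("/", 1)[0].split("?", 1)[0]
                hits[(token, "/" + first)] += 1
                break
    return hits


# Invented sample lines, for illustration only.
sample = [
    '66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /admin/login HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '157.55.39.1 - - [10/May/2024:10:01:00 +0000] "GET /blog/post-2 HTTP/1.1" 200 4800 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.7 - - [10/May/2024:10:02:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

hits = crawler_hits(sample)
for (bot, section), count in sorted(hits.items()):
    print(bot, section, count)
```

Requests from a crawler to a section that robots.txt is meant to block, such as /admin in the sample, suggest that a rule is missing or mistyped.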

Best Practices for Robots.txt

Maintaining an effective robots.txt file requires ongoing attention to best practices and common pitfalls.

Keeping the File Updated

As your website evolves, so too should your robots.txt file. Regular updates ensure that new content can be crawled and that outdated or irrelevant sections are excluded from crawling.

Avoiding Common Mistakes

Common mistakes in robots.txt files include syntax errors, overly broad directives, and neglecting to update the file as the site changes. By avoiding these pitfalls, you can maintain a more effective and efficient crawling strategy.
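Some of these mistakes can be caught mechanically. The following is a minimal lint sketch, not a full validator: it only knows the four directives covered in this article (so non-standard ones such as Crawl-delay would be flagged), and the function name `lint_robots_txt` and the sample file are hypothetical.

```python
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots_txt(text):
    """Return (line_number, message) pairs for a few common mistakes."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append((number, "rule appears before any User-agent line"))
            if value and not value.startswith(("/", "*")):
                problems.append((number, "path should start with '/'"))
    return problems


# A deliberately broken sample file.
sample = """\
Disallow: /early/
User-agent: *
Dissalow: /private/
Disallow private
Disallow: admin/
"""

problems = lint_robots_txt(sample)
for number, message in problems:
    print(f"line {number}: {message}")
```

A check like this catches typos and structural slips; it does not judge whether a rule is too broad, which still calls for testing against real URLs.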

Frequently Asked Questions

What is the main function of robots.txt?

The primary function of robots.txt is to tell web crawlers which parts of a website they may crawl, thereby managing crawler traffic and influencing how the site appears in search engines.

How can I prevent specific pages from being crawled?

To prevent specific pages from being crawled, use the Disallow directive in your robots.txt file, specifying the exact paths to the pages you want to block.

Can I block all crawlers using robots.txt?

Yes, you can block all crawlers by using the following directive:

User-agent: *

Disallow: /

This tells all bots not to crawl any part of the website.

What are the limitations of robots.txt?

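Robots.txt cannot enforce crawler behavior; it merely provides guidelines. Some bots may ignore the directives, particularly malicious ones. Additionally, it cannot prevent a URL from being indexed if other sites link to it: a search engine can list the URL without ever crawling the page. To keep a page out of search results, leave it crawlable and mark it with a noindex directive, or put it behind authentication.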

How do I allow Googlebot but disallow other crawlers?

To allow Googlebot while disallowing other crawlers, you can use the following directives:

User-agent: Googlebot

Disallow:

User-agent: *

Disallow: /

What happens if there are errors in my robots.txt file?

Errors in your robots.txt file can lead to unintended consequences, such as blocking important pages from being crawled or leaving areas open to crawlers that you meant to exclude. It’s crucial to test and validate the file to ensure it functions correctly.

Conclusion

Robots.txt is a powerful tool for webmasters aiming to control and optimize how their site is crawled by search engines. By understanding its syntax, implementing best practices, and regularly updating the file, you can enhance your site’s SEO performance and focus crawlers on your most valuable content.

Proper use of robots.txt conserves server resources and keeps well-behaved crawlers out of areas that do not belong in search, contributing to a more efficient and effective web presence. Because the file is publicly readable and compliance is voluntary, genuinely sensitive areas still need access controls.